Cloudflare outage: another wake-up call for resilience planning

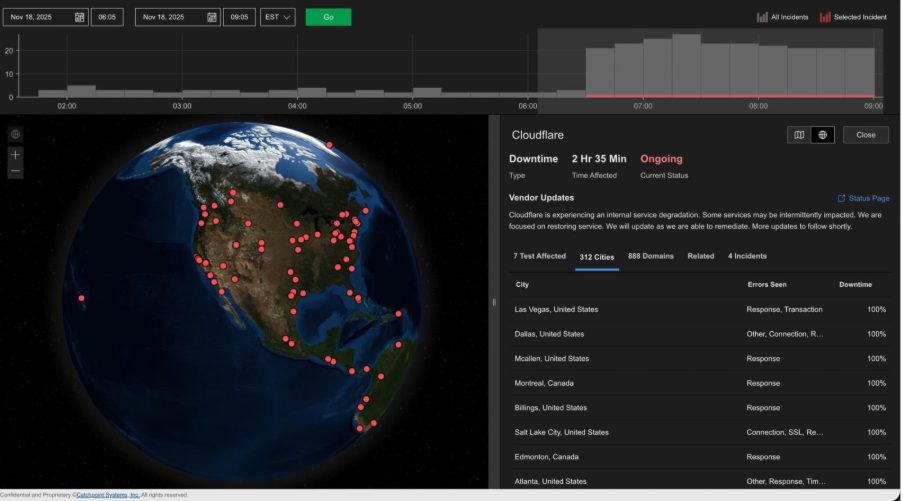

Another day, another massive Internet disruption, and this time it’s Cloudflare taking huge parts of the Internet offline.

This incident is not an anomaly. It is part of a recurring pattern that has become standard in digital infrastructure.

We have reached an inflection point in digital operations. Outages at major cloud and content delivery network (CDN) providers are now expected. The only real uncertainty is when the next one will happen. The more important question is: what are you doing about it?

The dangerous illusion of invincibility

Organizations continue to architect their entire digital presence around a belief that cloud providers or cloud services (like CDNs) will just work. Then they act surprised when that provider goes down and takes everything else with it.

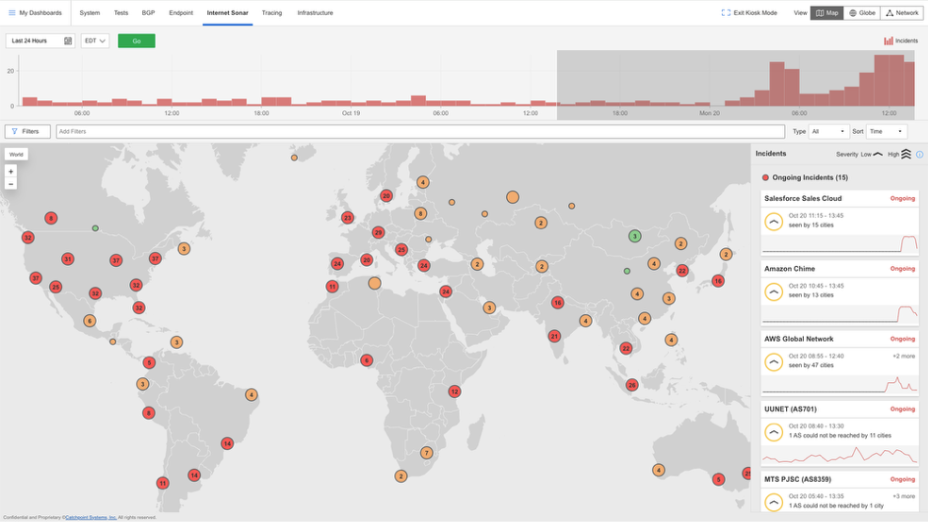

Take a look at the failures we have witnessed just recently. In July 2025, Cloudflare went down again. On October 20, it was AWS’s turn. Fortnite and Roblox players were suddenly locked out. Duolingo learners watched their streaks disappear. Snapchat’s 400 million daily users couldn’t send messages or view stories. Even Eight Sleep mattresses malfunctioned: some overheated or got stuck upright when their cloud connections failed.

Just nine days later, on October 29, 2025, Microsoft Azure’s Front Door CDN had a global outage that lasted more than eight hours. The result was widespread latency, timeouts, and errors across Microsoft 365, Outlook, Teams, Xbox Live, and many websites hosted on Azure – as well as corporate applications which had a component (an API, cloud service, or authentication service) hosted on Azure.

None of these outages are isolated events. They are evidence that cloud service failures should be treated as an expected problem in the infrastructure powering our connected world.

Just because a server runs in the cloud does not mean some magic pixie dust protects it from failures, misconfigurations, human error, failed updates, or its own dependencies.

The reality is that we live in a world where digital systems are critical for our daily lives, and those systems are complex, interconnected, distributed, and interdependent. There is no cloud magic that protects systems just because they are hosted in the cloud.

The problem isn't just technical. It's a mindset.

Recently, I spoke with someone who said:

"I'm on Cloudflare. I don’t need anything else, I don’t need monitoring."

That sentiment expresses the heart of the issue. Why do we keep experiencing these massive internet disruptions? The answer is not just technical. The real problem is a mindset. There is a belief that using Cloudflare, AWS, Azure, or any major platform somehow makes companies immune to failure. When people rely on a "trusted" provider, they may feel justified in skipping resilience planning.

This way of thinking must go.

CDNs have been critical for performance acceleration since the late 1990s. They reduce latency, absorb traffic spikes (and DDoS attacks), and cache content closer to users. Somewhere along the line, though, we stopped thinking of them as tools and started believing they were flawless gatekeepers. When that happens, vulnerability creeps in.

Even the best CDNs have outages. DNS delays, BGP leaks, TLS misconfigurations, and, in Cloudflare’s case, an oversized threat management file can all result in prolonged downtime. If you are locked into a single CDN without a fallback plan, there is nowhere left to redirect your traffic when disaster strikes.

Multi-cloud contradiction

Here’s an ironic twist. Enterprises invest heavily in multi-cloud strategies. They spread compute, storage, and databases across AWS, Azure, and Google Cloud, investing in complex architecture and ongoing management. Yet, for the CDN layer that actually connects users to their apps, they usually choose one vendor.

Think about it. No one should run critical backend systems in just one availability zone. So why route all traffic through just one CDN? It’s like installing a world-class security system and then leaving the front door locked with a basic lock.

The impact is not simply about downtime. CDN outages frustrate users, erode brand credibility, and can negatively affect revenue and customer trust in seconds.

When your CDN is also your eyes

The risk increases when companies depend on a single CDN not only for critical delivery but also for performance monitoring and incident visibility. It’s like having your accountant handle payroll, bookkeeping, and audit at the same time. While convenient, this approach means no independent oversight.

When AWS’s massive outage struck, it didn’t just take down cloud services, apps, and enterprise platforms. It also knocked out many of the monitoring systems organizations depend on for real-time answers. Observability companies, including Datadog, New Relic, Checkly, Dynatrace, SpeedCurve, and Splunk Observability, lost visibility or functionality precisely when organizations needed them most.

There is a very simple principle to follow: Your monitoring must be independent from the system being monitored.
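One rough way to audit this principle is to list what your service depends on, list what each monitoring tool depends on, and look for overlap. The sketch below is illustrative only: the dependency labels and tool names are hypothetical placeholders, and in practice you would have to fill these sets from vendor documentation or your own inventory.

```python
def shared_dependencies(service_deps: set[str], monitor_deps: set[str]) -> set[str]:
    """Return the dependencies a monitor has in common with the service it watches."""
    return service_deps & monitor_deps


def is_independent(service_deps: set[str], monitor_deps: set[str]) -> bool:
    """A monitor is independent only if it shares nothing with the monitored service."""
    return not shared_dependencies(service_deps, monitor_deps)


# Hypothetical inventory: every label below is made up for illustration.
service = {"cloud-a-region-1", "cdn-x", "dns-provider-y"}
monitors = {
    "vendor-dashboard": {"cdn-x"},               # runs on the CDN it reports on
    "hosted-apm": {"cloud-a-region-1"},          # hosted in the same cloud region
    "external-probes": {"independent-network"},  # separate infrastructure
}

report = {name: sorted(shared_dependencies(service, deps)) for name, deps in monitors.items()}
independent = sorted(name for name, deps in monitors.items() if is_independent(service, deps))
```

Any monitor with a non-empty entry in `report` can go dark in the same incident that takes down the service, which is the situation described above.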

Redundancy and Resilience: Not just insurance, but strategy

Adopting a multi-vendor architecture is not just an emergency backup. It is a sensible strategy that drives real business value in performance, cost, and control.

Here’s why:

- Regional Performance Optimization: Different CDN providers excel in different regions. One may dominate in Europe. Another is best for Southeast Asia. Why limit yourself to a single provider’s strengths? Use the best performer for each geography.

- Vendor Leverage and Cost Optimization: Multiple providers mean you retain bargaining power. If your CDN’s price goes up or service falters, you have the flexibility to move workloads. Being able to shift traffic also encourages competitive pricing.

- Feature Flexibility: Providers specialize in varied areas—edge compute, video delivery, TLS offload, security features. A multi-CDN approach lets you choose the right platform for each job.

- Resilience Through Redundancy: Automated failover and smart routing help multi-CDN systems maintain service, even during major outages. If one provider goes down, traffic shifts instantly, often before users notice. Businesses using robust multi-CDN setups reach five nines (99.999%) reliability, or less than six minutes of downtime per year, compared with eight or more hours of downtime per year with just one vendor.

- Intelligent Traffic Steering: When traffic is critical, traffic steering brings not only resilience but also the ability to make dynamic, real-time adjustments that optimize for user experience and cost.

A multi-vendor strategy is not foolproof. It can fail (and will fail) unless you have the right visibility and the right processes to react as needed. At Catchpoint, we are Cloudflare customers, but we also have backup accounts. Having a second, backup CDN in a cloud is not that hard.
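The availability figures quoted in the redundancy point above can be checked with simple arithmetic. The sketch below converts an availability percentage into yearly downtime and estimates the combined availability of redundant providers. The combined figure rests on two loud assumptions that real systems rarely meet: provider failures are statistically independent, and failover is instant and perfect. Treat it as an upper bound, not a prediction.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability: float) -> float:
    """Expected yearly downtime for an availability fraction such as 0.99999."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def combined_availability(*availabilities: float) -> float:
    """Availability of redundant providers.

    ASSUMPTION: failures are independent and failover is instant, so the
    service is down only when every provider is down at the same time.
    """
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a
    return 1.0 - all_down


five_nines_minutes = downtime_minutes_per_year(0.99999)
three_nines_hours = downtime_minutes_per_year(0.999) / 60
two_providers = combined_availability(0.999, 0.999)
```

Five nines works out to roughly 5.26 minutes of downtime per year, while a single provider at 99.9% allows about 8.76 hours. Two such providers give a theoretical 99.9999% under the independence assumption; correlated failures and slow failover are what pull real-world results below that.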

Even with a single vendor, some of the best IT teams today took advantage of proper planning and visibility to become aware of the problem immediately and switch traffic away from the failing service in seconds – while other teams continued to have failures for hours.
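As a minimal sketch of what "switch traffic away in seconds" can look like in logic, the example below picks a provider based on consecutive failed health probes. Everything here is an assumption for illustration: the provider names, the threshold of three failures, and the priority scheme are hypothetical, and the probe results would come from your own independent checks. How the decision is applied (DNS, a steering service, or configuration change) is outside the sketch.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed threshold: consecutive failed probes before a provider is skipped.
FAILURE_THRESHOLD = 3


@dataclass
class Provider:
    name: str                      # hypothetical label, e.g. "cdn-primary"
    priority: int                  # lower number = preferred
    consecutive_failures: int = 0


def record_probe(provider: Provider, ok: bool) -> None:
    """Update the failure streak from one independent health probe."""
    provider.consecutive_failures = 0 if ok else provider.consecutive_failures + 1


def choose_provider(providers: list[Provider]) -> Optional[Provider]:
    """Return the preferred healthy provider, or None if all are failing."""
    healthy = [p for p in providers if p.consecutive_failures < FAILURE_THRESHOLD]
    return min(healthy, key=lambda p: p.priority) if healthy else None


primary = Provider("cdn-primary", priority=1)
backup = Provider("cdn-backup", priority=2)
providers = [primary, backup]

# Simulate an outage: the primary fails enough probes to cross the threshold.
for _ in range(FAILURE_THRESHOLD):
    record_probe(primary, ok=False)
during_outage = choose_provider(providers)

# One successful probe resets the streak and traffic returns to the primary.
record_probe(primary, ok=True)
after_recovery = choose_provider(providers)
```

Requiring several consecutive failures avoids flapping on a single dropped probe. A production setup would likely also want a recovery threshold before shifting traffic back, which this sketch omits.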

Building resilience: a practical framework

Resilience does not arise by itself. It requires deliberate architectural choices and operational discipline. It needs to become a business priority that is taken seriously.

- Establish a Chief Resilience Officer position. It’s time for companies to take this seriously and appoint a CRO who reports to the board. It needs to be an independent position with the power to make the right investments and changes to minimize the risk to the business.

- Become aware of your dependencies, and the dependencies of your dependencies. When Azure went down, Docusign went down too. For real estate businesses, for example, that is a very serious problem, and most likely a dependency they were not aware of.

- Plan for failure. Chaos engineering was invented in 2010 by Netflix when the cloud was a new thing. It was built on the idea of defining a steady state for a system, being aware of how a system could fail, designing how the system should respond to failure, and then running real-world controlled experiments to test for resilience.

- Design Redundancy for every dependency: Eliminate single points of failure. Be prepared for any component of the system to fail. Distribution should be strategic, reflecting workload, geography, and risk.

- Create runbooks for every possible failure. It is important to document the process and desired actions when incidents happen, based on their scope and severity.

- Test Failover Routinely: Simulate outages on a regular schedule. Confirm that both systems and staff respond well in emergencies. Practice makes sure no critical step is overlooked when customers depend on you.

- Establish Independent Visibility: Use monitoring tools that give you independent visibility into every dependency and let you react quickly, minimizing impact to the business. Don’t rely only on vendor dashboards. Independent validation is key.

- Understand Global and Local Performance: Users in different regions can have wildly different experiences. Service outages can be regional. Performance variations can become meaningful quickly. Monitor from diverse global vantage points to understand the real-world user experience – not only global uptime metrics.
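The "dependencies of your dependencies" item in the list above lends itself to a small worked example. The sketch below walks a dependency map to find everything a service relies on, directly or indirectly, and then isolates the hidden ones. The graph contents are hypothetical placeholders, not a description of any real vendor's architecture; building an accurate map for your own systems is the hard part.

```python
from collections import deque


def transitive_dependencies(graph: dict[str, list[str]], root: str) -> set[str]:
    """Return every direct and indirect dependency of `root` (breadth-first)."""
    seen: set[str] = set()
    queue = deque(graph.get(root, []))
    while queue:
        dep = queue.popleft()
        if dep in seen:
            continue  # already visited; this also guards against cycles
        seen.add(dep)
        queue.extend(graph.get(dep, []))
    seen.discard(root)  # a cycle back to the root is not a dependency on itself
    return seen


# Hypothetical dependency map: every name here is made up for illustration.
graph = {
    "contract-signing-flow": ["e-signature-vendor", "crm"],
    "e-signature-vendor": ["cloud-x-edge"],
    "crm": ["auth-service"],
    "auth-service": ["cloud-x-edge"],
}

direct = set(graph["contract-signing-flow"])
everything = transitive_dependencies(graph, "contract-signing-flow")
hidden = everything - direct  # dependencies you never signed a contract with
```

In this made-up map, `hidden` contains `auth-service` and `cloud-x-edge`: an outage in either one stops the signing flow even though neither appears in its direct dependency list.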

The path forward

Outages are unavoidable. You will experience a service outage soon. The only question is when. But confusion, paralysis, and uncertainty do not have to be the result.

This discussion is not about moving away from Cloudflare, AWS, Azure, or any major platform. Even the best vendors suffer incidents; it’s the nature of technology. These providers offer immense capability and real business value. The real issue is building resilience, not relying on hope or luck.

Redundancy is no longer optional. It is where resilience starts.

P.S. You may be interested in the 2025 Internet Resilience Report. It’s an insightful read (no registration required).

Summary

Cloudflare’s latest outage, like those on AWS and Azure before it, shows outages aren’t a surprise, but an expectation. Relying on a single CDN is a recipe for disaster. True resilience demands multi-CDN strategies, routine failover testing, independent performance monitoring, and a mindset shift away from convenience toward preparedness. Outages are normal; chaos is optional.