Cloudflare’s Resolver Outage: More Than Just DNS

UPDATE 7/16/2025 - Cloudflare posted a detailed post-mortem analysis about their incident here.

TL;DR: A misconfiguration in Cloudflare’s internal systems accidentally linked the 1.1.1.1 IP prefixes to a non-production service topology. When a test location was added, it triggered a global withdrawal of those prefixes from Cloudflare’s data centers - eventually causing the outage.

The hijack detected was not the cause of the outage, but only a latent unrelated issue that surfaced due to the routes being withdrawn.

Catchpoint applauds Cloudflare’s commitment to transparency - sharing detailed insights into this incident not only reinforces trust but also helps the entire Internet community learn and improve.

“It’s always DNS.”

That’s the running joke in IT. When websites won’t load and apps grind to a halt, DNS—the internet’s address book—is often the first to get blamed. That’s because DNS translates human-friendly names like google.com into IP addresses that computers use to route traffic.

Millions of users rely on public resolvers like Cloudflare’s 1.1.1.1, Google’s 8.8.8.8, or Quad9 because they’re usually faster and more reliable than what their ISPs provide.

So, when 1.1.1.1 went dark on July 14, 2025, millions were cut off from the web. Reddit lit up, ISPs got blamed, and users rebooted routers to no avail. But this time? It wasn’t DNS, at least not directly.

Outage overview: what really happened to 1.1.1.1?

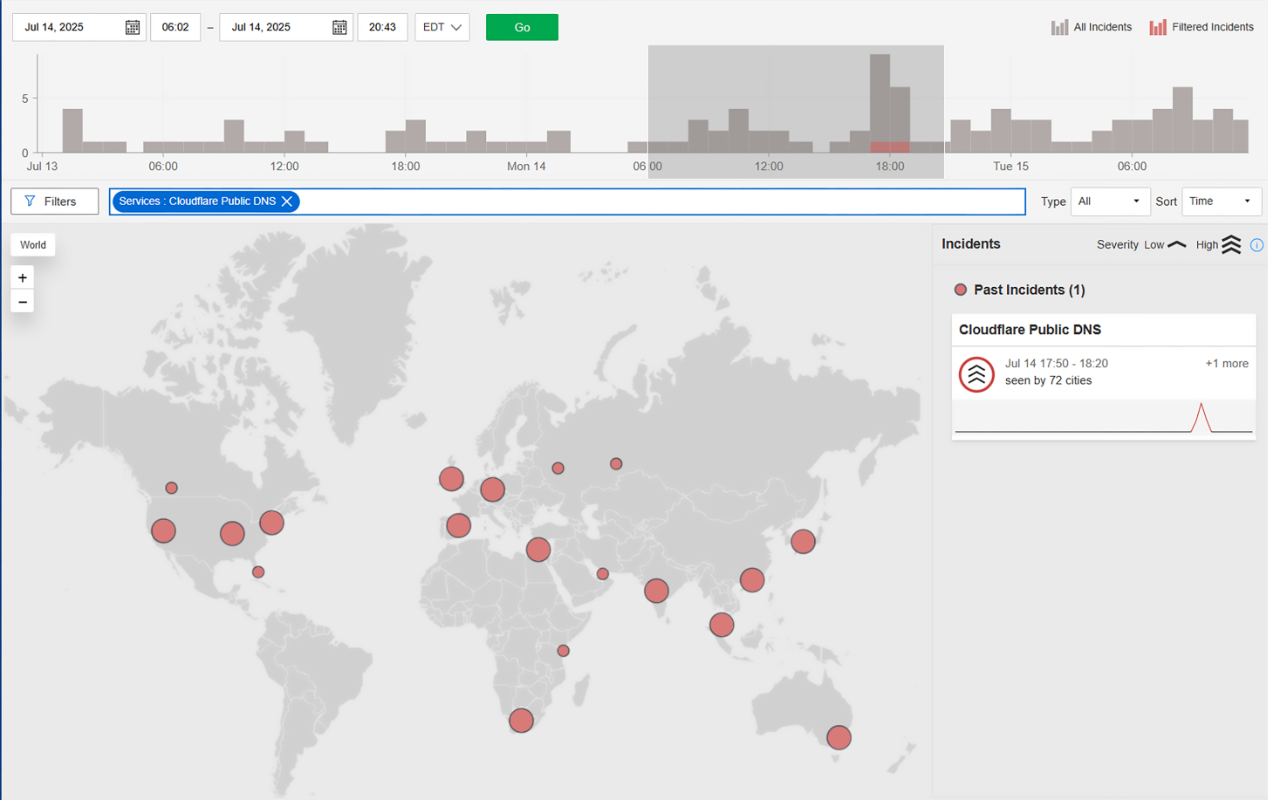

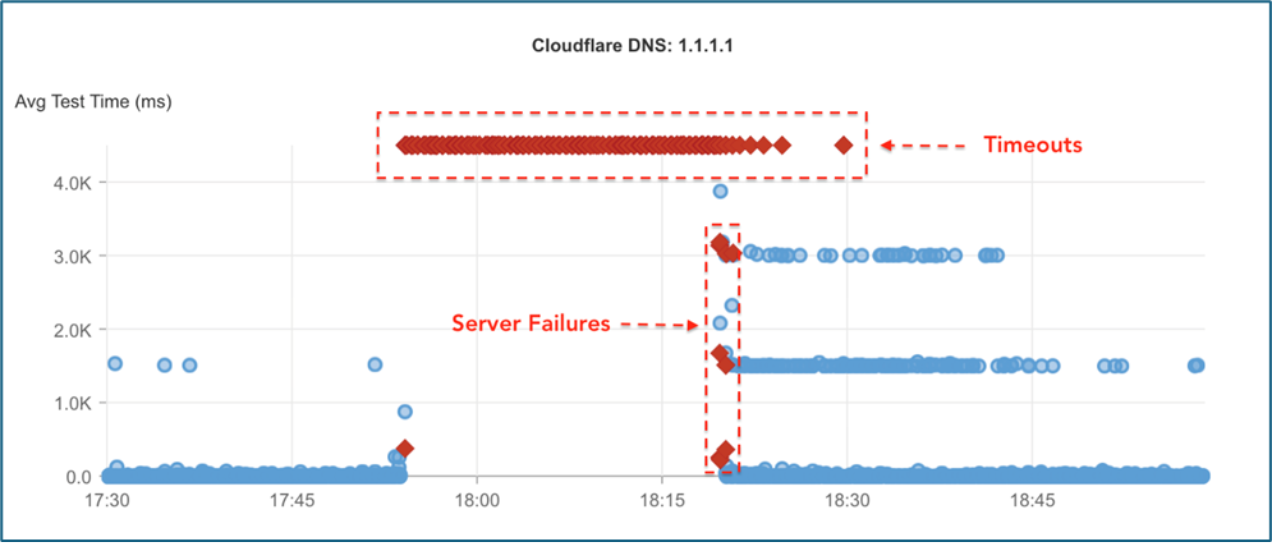

At 17:50 EDT on July 14, Catchpoint’s Internet Sonar detected a sharp and sudden spike in DNS query failures targeting Cloudflare’s public resolver, 1.1.1.1.

From every angle, it looked like a DNS outage: query timeouts and, toward the end of the incident, SERVFAIL errors.

But the underlying cause wasn’t a malfunctioning DNS server—it was a BGP route hijack.

BGP hijack explained: How Tata took 1.1.1.1 off the map

Behind the scenes, the disruption was caused not by DNS failures themselves but by tampering with Internet routes. During the outage, we witnessed a mass withdrawal of 1.1.1.0/24, a range that belongs to Cloudflare, and the announcement of a hijacked route originated by Tata Communications India (AS4755). This unauthorized announcement propagated through Tata’s global backbone (AS6453) and rapidly spread to many networks worldwide.

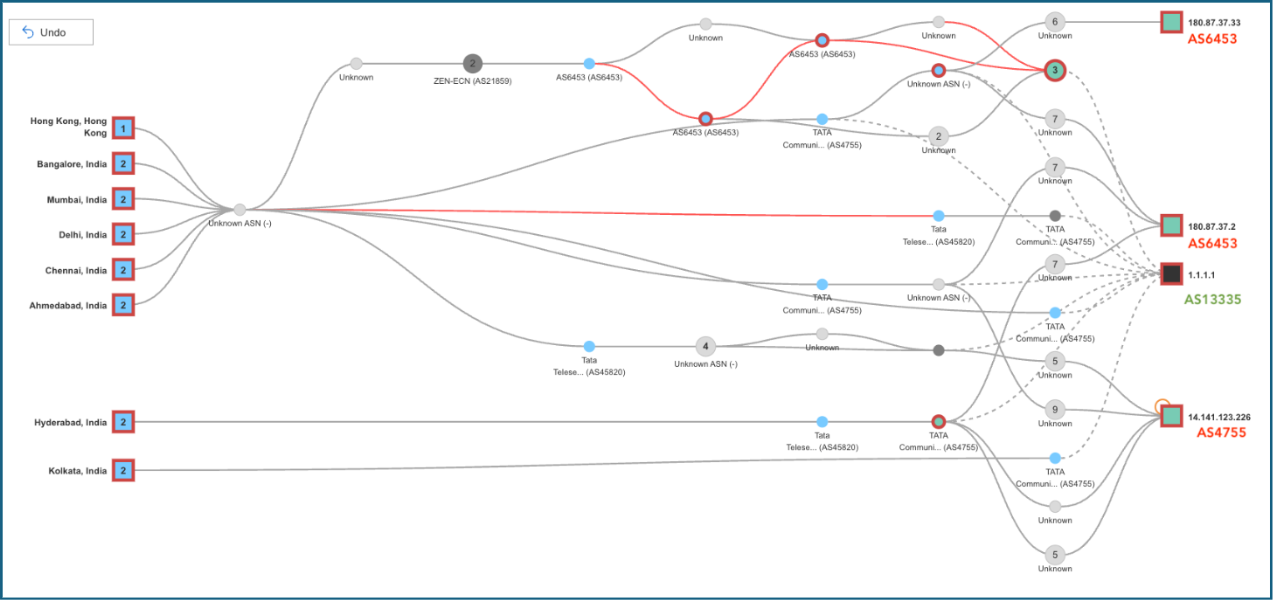

This traceroute visualization tells the full story. Each hop is grouped by its Autonomous System Number (ASN) to show how traffic flowed—or failed to.

Cloudflare’s legitimate origin ASN (AS13335) is visible where valid routes remained, but many traceroutes never made it there. A significant number of our tests failed with “no route” errors—suggesting the legitimate path was withdrawn—while others exposed the propagation of a hijacked prefix via unauthorized AS paths.

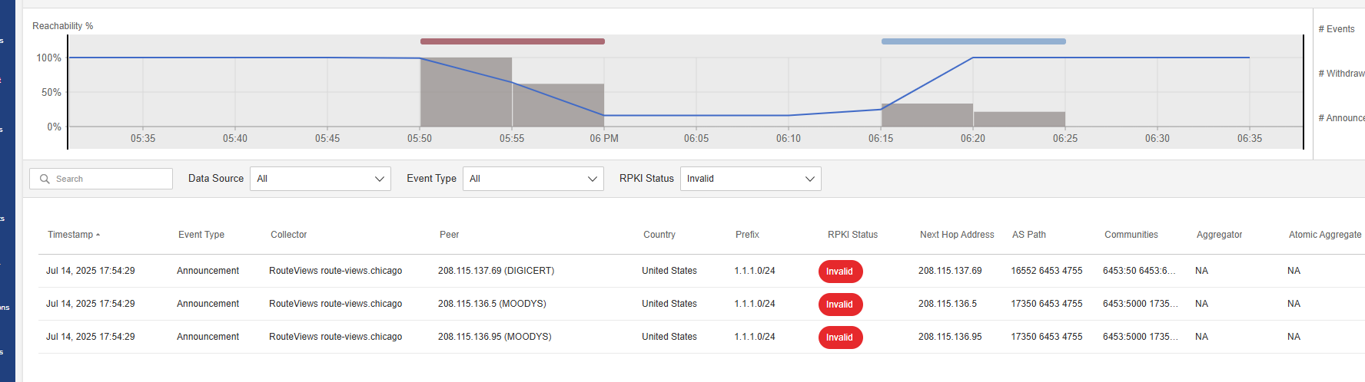

RPKI knew it was wrong. The Internet didn’t care.

While Cloudflare had a valid ROA for 1.1.1.0/24, the hijacked route originated by AS4755 was clearly marked as RPKI Invalid.
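To make the "RPKI Invalid" verdict concrete, here is a minimal sketch of route origin validation in the spirit of RFC 6811. The `ROA` class and `validate_origin` function are illustrative names, not part of any real validator, and the maximum length of 24 is an assumption for the example rather than a value copied from Cloudflare's actual ROA.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class ROA:
    prefix: str      # prefix covered by the ROA
    max_length: int  # longest prefix length the origin may announce
    origin_asn: int  # the AS authorized to originate the prefix


def validate_origin(prefix: str, origin_asn: int, roas: list[ROA]) -> str:
    """Return 'valid', 'invalid', or 'not-found' for an announcement."""
    route = ip_network(prefix)
    covered = False
    for roa in roas:
        roa_net = ip_network(roa.prefix)
        # Skip ROAs of another address family or that do not cover the route.
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue
        covered = True
        if roa.origin_asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by at least one ROA but matched by none: invalid.
    return "invalid" if covered else "not-found"


# Assumption: max_length=24 is illustrative, not taken from the real ROA.
roas = [ROA("1.1.1.0/24", 24, 13335)]

print(validate_origin("1.1.1.0/24", 13335, roas))  # Cloudflare as origin
print(validate_origin("1.1.1.0/24", 4755, roas))   # origin seen in the hijacked route
```

Under these assumptions the first call prints `valid` and the second prints `invalid`: the prefix is covered by a ROA, but no ROA authorizes AS4755 to originate it.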

The chart here shows a sample of these invalid announcements from peers in the United States, captured by Catchpoint’s platform (in this case, thanks to RouteViews collectors). Despite being invalid according to the ROAs, the announcements were still accepted and propagated by major networks, including AS6453.

During this period, many agents withdrew the valid route via Cloudflare’s ASN (AS13335). Some of those nodes lost reachability entirely, while others adopted the hijacked route pointing toward AS4755. Several ISPs like Lumen and AT&T had no routes at all for 1.1.1.0/24—suggesting they honored RPKI filtering and dropped the invalid announcements from Tata. However, it remains unclear why there were no other paths for 1.1.1.0/24. Under normal circumstances, we would expect to see Cloudflare’s own announcements reach these networks.

Whether it's better for a resolver to go dark or get silently rerouted through an unauthorized network is debatable—but in this case, many networks took the latter path, with consequences visible to millions of users.

Interestingly, RPKI was not able to save the day in this scenario. Cloudflare had created a proper ROA stating that the prefix hosting its DNS service can be originated only by Cloudflare’s AS number (AS13335), as can be seen here. Tata itself is known to apply RPKI-based filtering, as Cloudflare states on the Is BGP safe yet? website. However, it looks like Tata did not apply RPKI filtering checks to routes originated by AS numbers within its own group, and the hijack/misconfiguration slipped out into the wild. Or as Murphy would say: “If anything simply cannot go wrong, it will anyway.”

Why this caused issues:

- ISPs around the world believed Tata’s AS4755 and AS6453 had a better path to 1.1.1.1.

- As a result, millions of DNS queries were misrouted—sent to the wrong networks.

- Those queries either timed out or received invalid responses, effectively cutting off users from the DNS service.
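The outcomes described above can be sketched with a toy route selection function. This is a deliberate simplification: real BGP best-path selection weighs many attributes, while this sketch compares only AS path length. The neighbor AS64500 is a documentation-range ASN used as a hypothetical upstream, and `best_route` is an illustrative name.

```python
from typing import Optional

# A candidate route: (AS path, RPKI validation state).
Route = tuple[tuple[int, ...], str]


def best_route(candidates: list[Route], drop_invalid: bool) -> Optional[Route]:
    """Pick the shortest AS path among accepted routes, or None if none remain."""
    accepted = [
        route for route in candidates
        if not (drop_invalid and route[1] == "invalid")
    ]
    if not accepted:
        return None  # no route at all: the prefix is unreachable
    return min(accepted, key=lambda route: len(route[0]))


# Hypothetical paths: AS64500 stands in for an arbitrary upstream network.
legit = ((64500, 13335), "valid")
hijack = ((6453, 4755), "invalid")

# Legitimate route present and filtering enabled: the valid route is used.
print(best_route([legit, hijack], drop_invalid=True))
# Legitimate route withdrawn, no filtering: traffic follows the hijacked route.
print(best_route([hijack], drop_invalid=False))
# Legitimate route withdrawn, filtering enabled: no route remains.
print(best_route([hijack], drop_invalid=True))
```

The last two cases mirror what we observed: once the valid route was withdrawn, networks without filtering adopted the invalid path, while networks that filtered were left with no route to 1.1.1.0/24.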

Real-world impact: What it meant for end users

The effect for end users was immediate and confusing:

- Websites didn’t load: Even though most websites and services were fully operational, users couldn’t reach them. Without DNS resolution, domain names couldn’t be translated into IP addresses, so browsers returned errors or spun endlessly.

- Apps appeared broken: Streaming platforms, messaging apps, payment gateways, and enterprise tools all rely on domain name resolution to function. When DNS failed, these services appeared to be “down” or disconnected—prompting support tickets, user complaints, and internal confusion.

- Local troubleshooting led nowhere: Many users assumed the problem was on their end. They rebooted routers, toggled Wi-Fi, or even contacted their ISP—wasting time while the root cause remained external and invisible to them.

- Reddit and DownDetector lit up—too late: With no immediate word from Cloudflare, users turned to social platforms for confirmation. Reddit threads and DownDetector entries surged—but these are reactive tools, reflecting only what people report, not what’s actually failing. They provided no diagnostics, timelines, or guidance.

Takeaways: what this outage teaches us about Internet Resilience

Cloudflare’s DNS outage is a lesson in how fragile the Internet’s routing infrastructure still is—and how easily it can be disrupted when best practices aren’t followed. Here’s what we learned.

#1. Monitor everything

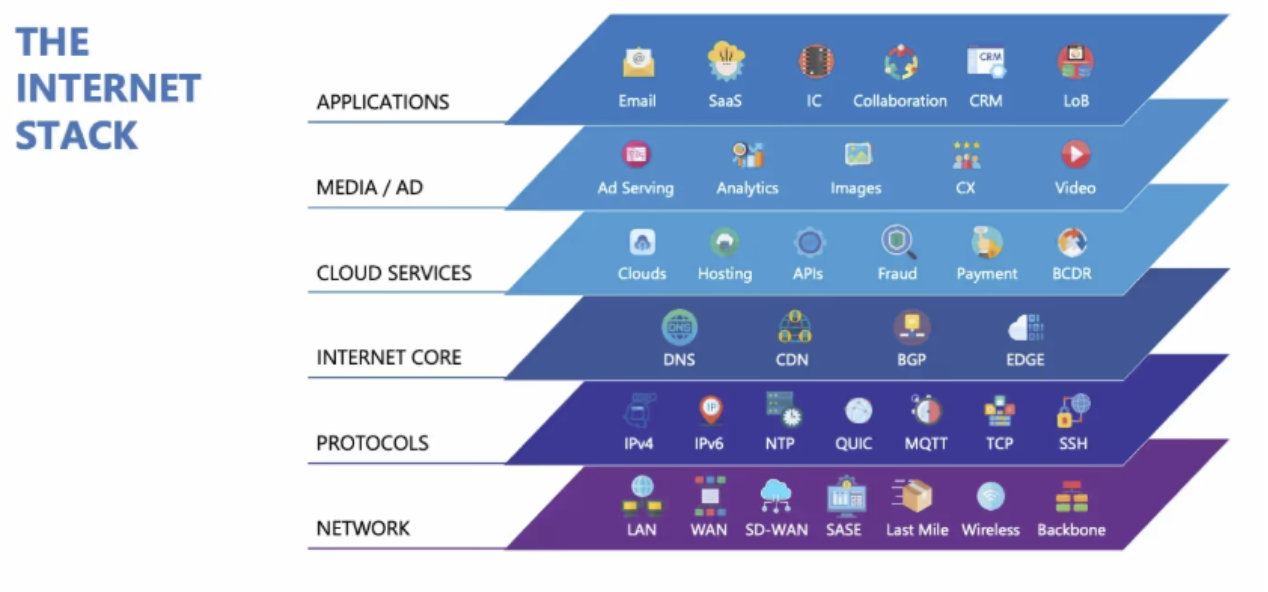

Mission-critical services depend on external systems like DNS, BGP, and transit networks. DNS especially is a single point of failure: if it breaks, nothing works, regardless of how healthy your backend is. So, if it feels like “everything on the Internet is down,” there’s a good chance it’s DNS. Public resolvers like 1.1.1.1 are foundational to connectivity, and when they become unreachable, even healthy services appear broken. A good monitoring strategy covers the entire Internet Stack, including protocols such as BGP and DNS.

After all, your customers won’t know it’s Tata or Cloudflare’s fault. They’ll blame you.

Read: The Need for Speed – Why you should give your network’s DNS performance a closer look

#2. ISPs must enforce RPKI filtering

The route hijack that broke access to Cloudflare’s 1.1.1.1 was invalid. It should have been rejected. Networks that properly implemented RPKI-based origin validation filters did just that, and their users’ queries were never sent down the hijacked path. Even if there’s no other way to reach the destination, you should never take a road that is clearly hijacked. Filtering invalid routes is no longer optional; it’s table stakes for a resilient Internet.

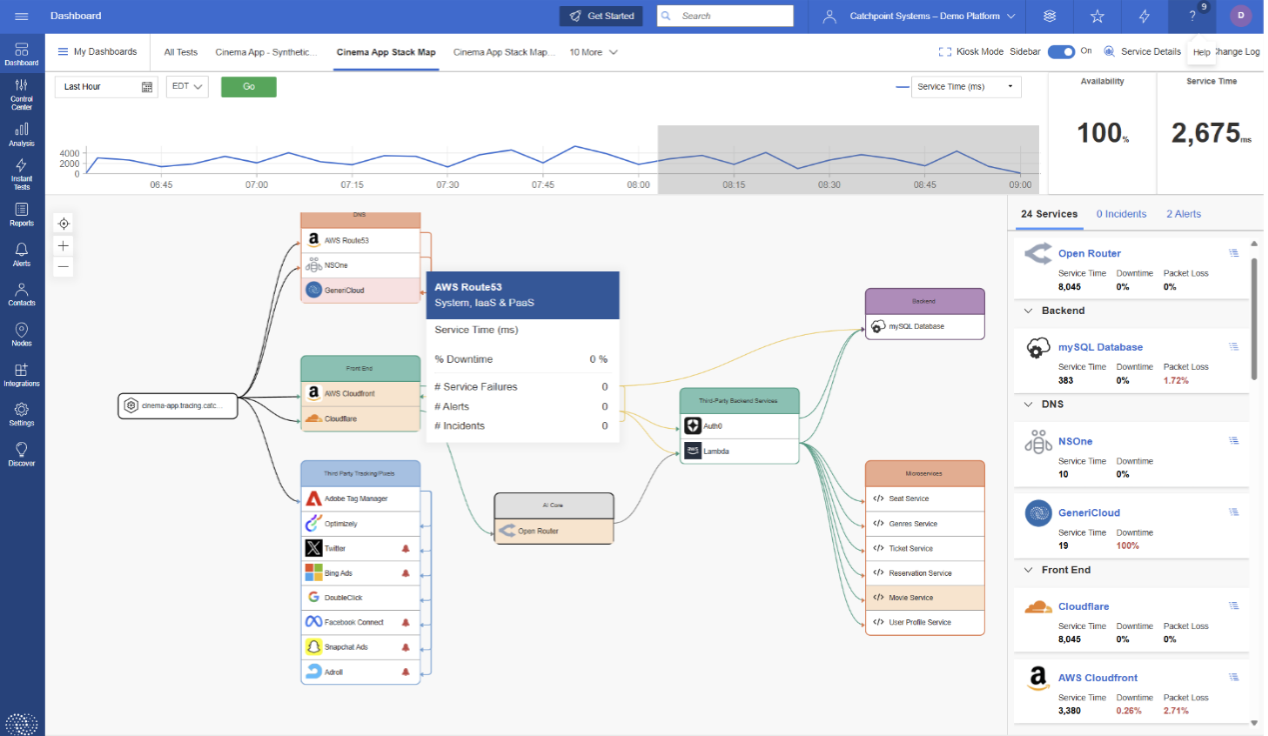

#3. Early detection is everything

Outages unfold fast. To get ahead of them, organizations need proactive Internet Performance Monitoring (IPM) powered by AI tools like Internet Sonar and Internet Stack Map. Together, they enable you to swiftly answer the question: “Is it me, or something else?” In the case of the Cloudflare outage, Sonar flagged the issue 23 minutes before Cloudflare posted on their status page—a window that’s invaluable when service continuity and SLAs are on the line.
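The “Is it me, or something else?” question can be illustrated with a toy classifier. This is not how Internet Sonar works; it is a minimal sketch that assumes you already collect pass/fail probe results for your own service and for an external dependency such as a public resolver. The function names and the 50% threshold are hypothetical.

```python
def failure_rate(samples: list[bool]) -> float:
    """Fraction of failed probes; an empty sample counts as no failures."""
    if not samples:
        return 0.0
    return sum(1 for ok in samples if not ok) / len(samples)


def diagnose(own_probes: list[bool],
             dependency_probes: list[bool],
             threshold: float = 0.5) -> str:
    """Classify an incident from probe results (True means the probe passed).

    own_probes: direct checks of your service that bypass the dependency.
    dependency_probes: checks of the dependency from several vantage points.
    """
    own_bad = failure_rate(own_probes) >= threshold
    dependency_bad = failure_rate(dependency_probes) >= threshold
    if own_bad and dependency_bad:
        return "both"
    if own_bad:
        return "me"
    if dependency_bad:
        return "something else"
    return "healthy"


# Service healthy when reached directly, resolver failing from 3 of 4 locations.
print(diagnose([True, True, True, True], [False, False, True, False]))
```

With these sample inputs the sketch prints `something else`, which is the situation many teams were in during this incident: their own services were healthy, but a dependency outside their perimeter was not.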

#4. Don’t depend on social signals like Reddit or DownDetector

By the time these platforms light up, the damage is already done. Social chatter is reactive, fragmented, and often misleading. Use data-driven monitoring tools to spot outages before your users do—not after they’ve started complaining.

Read: Is your cloud provider telling you everything, everything, all at once?

#5. Always specify a backup DNS resolver

And if you're an end user? Always specify a backup DNS resolver from a different provider. For example, if your primary is Cloudflare (1.1.1.1), set Google (8.8.8.8) as your secondary. It’s a simple step that can keep you online even when one DNS service hits trouble.
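Operating systems handle resolver fallback for you once a secondary is configured, but the logic is simple enough to sketch. The example below builds a minimal DNS query in the RFC 1035 wire format and tries each resolver in order until one answers without an error. The function names are illustrative, the two-second timeout is an assumption, and the sketch skips checks a real client would perform, such as matching the reply ID to the query.

```python
import socket
import struct


def build_query(name: str, query_id: int = 0x1234) -> bytes:
    """Encode a minimal DNS query for an A record."""
    # Header: ID, flags (recursion desired), 1 question, 0 other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = name.rstrip(".").split(".")
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii") for label in labels
    ) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def query_udp(resolver: str, name: str, timeout: float = 2.0) -> int:
    """Send one query over UDP and return the response code (RCODE)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (resolver, 53))
        reply, _ = sock.recvfrom(512)
    if len(reply) < 4:
        raise OSError("truncated DNS reply")
    return reply[3] & 0x0F  # RCODE is the low 4 bits of the fourth header byte


def first_working_resolver(name, resolvers, query=query_udp):
    """Return the first resolver that answers with NOERROR, or None."""
    for resolver in resolvers:
        try:
            if query(resolver, name) == 0:  # 0 = NOERROR, 2 = SERVFAIL
                return resolver
        except OSError:  # timeouts and unreachable networks land here
            continue
    return None


# Usage (performs real network queries):
# first_working_resolver("example.com", ["1.1.1.1", "8.8.8.8"])
```

During an incident like this one, queries to 1.1.1.1 would time out or return SERVFAIL, and the loop would move on to the resolver from the second provider.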

Conclusion

The Cloudflare 1.1.1.1 outage wasn’t caused by DNS infrastructure failure—but by a breakdown in the trust-based fabric of Internet routing. A single misconfigured BGP announcement from one network, improperly validated and widely propagated, was enough to make a critical service unreachable for millions.

This incident is a reminder that resilience isn’t just about uptime; it’s about visibility, validation, and vigilance across the entire Internet Stack. Whether you operate a global network or rely on one, protecting users from outages like this means doing the hard work: enforcing RPKI, monitoring beyond your perimeter, and responding to failures with precision, not guesswork. There’s still more to understand about how this incident unfolded. We look forward to a detailed RCA from Cloudflare to help the broader community learn from it.

Because sometimes, it’s “always DNS” …until it isn’t.