Charles Conley

IT Ops Manager

Know when networks, APIs, LLMs, or other dependencies fail your AI agents

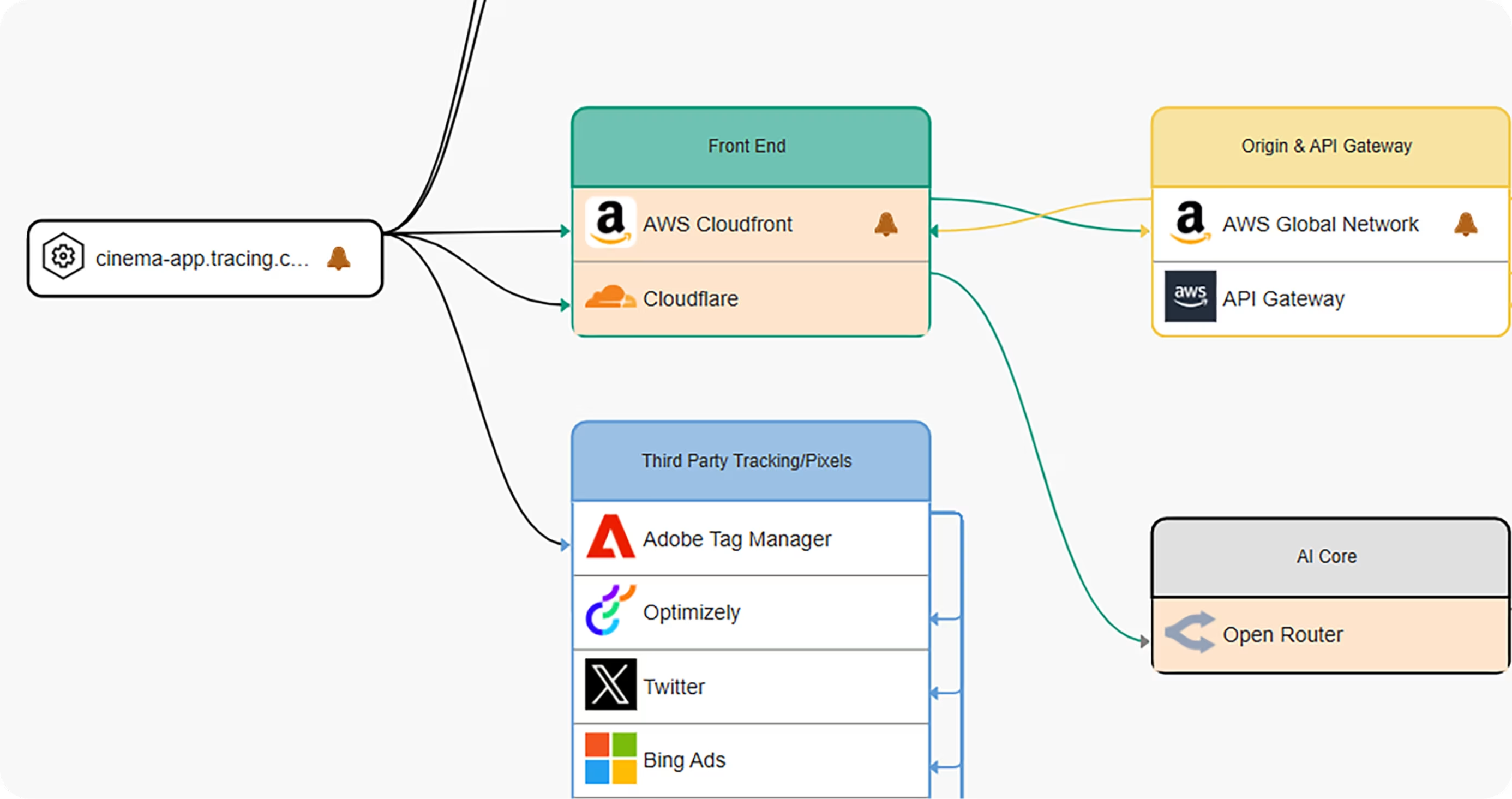

Autonomous AI meshes don't rely on a single API. They depend on a complex web of cloud services, APIs, LLM endpoints, vector databases, SaaS tools, and the global internet. When DNS fails, when a third-party API goes down, or when a BGP hijack diverts traffic away from a model endpoint, your AI agent stalls or, worse, behaves unpredictably. Observability for agentic AI means understanding not just the AI, but every external dependency and internet component that enables it.

Agentic AI chains tasks across APIs, models, and data sources. A DNS failure, API outage, cloud region disruption, or CDN issue isn't just a blip; it's a broken agent workflow. Without observability into external networks and third-party components, teams can't diagnose failures, mitigate risks, or restore service quickly. Internet Performance Monitoring (IPM), Digital Experience Monitoring (DEM), and Synthetics give full-stack visibility into the internet dependencies your agents can't live without.

Detect DNS failures, CDN misconfigurations, degraded API availability, latency, or stability, and BGP hijacks that prevent agents from reaching models or critical data sources anywhere in the world. Spot failures in SaaS tools, cloud APIs, or vector databases before workflows collapse.
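A minimal sketch of how a synthetic check can tell a DNS failure apart from a network-level failure: resolve the host first, then open a TCP connection, timing each stage separately. The `probe` function and `ProbeResult` type are hypothetical illustrations built only on Python's standard `socket` module; they do not cover CDN, TLS, HTTP-level, or BGP checks, which a real monitoring platform would add on top.

```python
import socket
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeResult:
    host: str
    stage: str                   # "dns", "connect", or "ok": where the probe stopped
    dns_ms: Optional[float]      # resolution time, None if DNS failed
    connect_ms: Optional[float]  # TCP connect time, None if the connection failed
    error: Optional[str] = None


def probe(host: str, port: int = 443, timeout: float = 3.0) -> ProbeResult:
    """Resolve `host`, then open a TCP connection, timing each stage (hypothetical helper)."""
    t0 = time.perf_counter()
    try:
        infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        # Name resolution failed: the problem is DNS, not the model or API behind it.
        return ProbeResult(host, "dns", None, None, str(exc))
    dns_ms = (time.perf_counter() - t0) * 1000

    family, socktype, proto, _, addr = infos[0]
    t1 = time.perf_counter()
    try:
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            sock.connect(addr)
    except OSError as exc:
        # DNS worked, but the endpoint is unreachable (refused, timed out, no route).
        return ProbeResult(host, "connect", dns_ms, None, str(exc))
    connect_ms = (time.perf_counter() - t1) * 1000
    return ProbeResult(host, "ok", dns_ms, connect_ms)
```

A result with `stage="dns"` points at name resolution rather than the API itself, which is the distinction that keeps teams from debugging the agent when the real fault is upstream.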

Track the response time, latency, stability, and token usage of the LLMs your organization depends on.
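A sketch of per-model tracking, assuming each call returns a dict containing an OpenAI-style `usage` object with `prompt_tokens` and `completion_tokens`; other providers report usage under different field names. `LLMStats` is a hypothetical helper, and the zero-argument `call` stands in for whatever client call your agent actually makes.

```python
import statistics
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class LLMStats:
    """Running latency, error, and token counters for one LLM endpoint (hypothetical helper)."""

    latencies_ms: List[float] = field(default_factory=list)
    prompt_tokens: int = 0
    completion_tokens: int = 0
    errors: int = 0

    def record(self, call: Callable[[], Dict[str, Any]]) -> None:
        """Time one call and add its token usage; count any exception as an error."""
        start = time.perf_counter()
        try:
            response = call()
        except Exception:
            self.errors += 1
            return
        self.latencies_ms.append((time.perf_counter() - start) * 1000)
        # Assumes an OpenAI-style "usage" object; missing fields count as zero.
        usage = response.get("usage", {})
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        self.completion_tokens += usage.get("completion_tokens", 0)

    def p95_ms(self) -> float:
        """95th-percentile latency of successful calls."""
        if len(self.latencies_ms) < 2:
            return self.latencies_ms[0] if self.latencies_ms else 0.0
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        # method="inclusive" keeps the estimate within the observed range.
        return statistics.quantiles(self.latencies_ms, n=20, method="inclusive")[-1]
```

Tracking errors separately from latency matters here: a model that fails fast can look healthy on a latency chart alone.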

Detect outages for AI assistants such as Microsoft Copilot, OpenAI ChatGPT, Google Gemini, X Grok, Perplexity, IBM watsonx, and more.

If you're not monitoring AI endpoints, you're flying blind. Failures in DNS, APIs, network routes, or AI responses often surface first as customer complaints. Internet Performance Monitoring, Digital Experience Monitoring, and Synthetic Testing give you proactive visibility, so you can fix problems before they affect revenue, trust, or user experience.
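One way to detect an AI assistant outage without paging on a single flaky probe is to declare an outage only when several consecutive checks fail from multiple vantage points. The sketch below assumes check results are already collected per monitoring location (`True` for success, oldest first); `outage_locations`, `is_outage`, and the default thresholds are hypothetical choices, not recommended values.

```python
from typing import Dict, List


def outage_locations(history: Dict[str, List[bool]], consecutive: int = 3) -> List[str]:
    """Return locations whose most recent `consecutive` checks all failed."""
    return [
        location
        for location, results in sorted(history.items())
        if len(results) >= consecutive and not any(results[-consecutive:])
    ]


def is_outage(
    history: Dict[str, List[bool]], consecutive: int = 3, min_locations: int = 2
) -> bool:
    """Treat the endpoint as down only if enough locations agree it is failing."""
    return len(outage_locations(history, consecutive)) >= min_locations
```

Requiring agreement across locations separates a genuine provider outage from a local network problem near one probe.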



When AI agents stall, hallucinate, or fail to complete tasks, the root cause often hides outside the model: in broken APIs, network outages, or unreachable services. Without visibility into this internet-scale stack, teams waste time debugging the AI while the real problem is DNS, routing, or a third-party outage. Monitoring external dependencies is critical to delivering reliable autonomous AI.

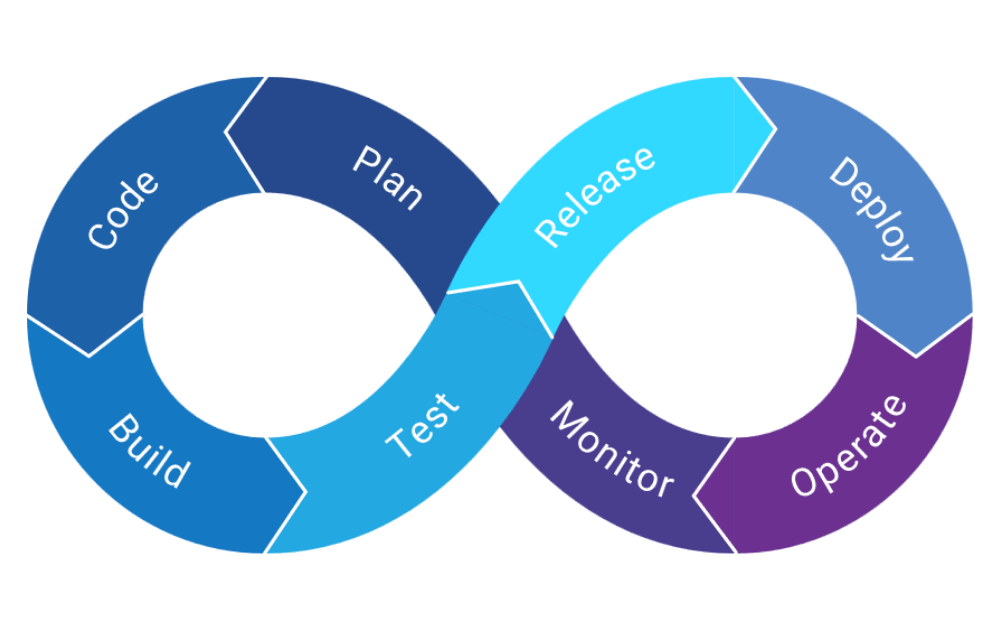

Integrate observability into your deployment pipelines. Automatically create, update, and delete monitors as agents, APIs, or AI models are pushed to production. Ensure every change to your AI stack is tested for reachability, latency, and availability, with no manual setup.
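The create/update/delete step can be sketched as a reconciliation between the monitors a deployment manifest says should exist and the monitors that currently exist. `MonitorSpec`, its fields, and `plan_changes` are hypothetical; actually applying the plan would mean calling your monitoring platform's API, which is not shown here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class MonitorSpec:
    """Desired state of one synthetic monitor (hypothetical schema)."""

    target: str                                          # URL or hostname the monitor tests
    interval_s: int = 60                                 # how often the check runs
    locations: Tuple[str, ...] = ("us-east", "eu-west")  # where it runs from


def plan_changes(
    desired: Dict[str, MonitorSpec], existing: Dict[str, MonitorSpec]
) -> Dict[str, List[str]]:
    """Diff desired vs. existing monitors by name into create/update/delete lists."""
    return {
        "create": sorted(desired.keys() - existing.keys()),
        "update": sorted(
            name
            for name in desired.keys() & existing.keys()
            if desired[name] != existing[name]
        ),
        "delete": sorted(existing.keys() - desired.keys()),
    }
```

Computing a plan first, rather than mutating monitors directly, makes the step idempotent: rerunning a pipeline against an unchanged manifest produces an empty plan.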

Detect latency, outages, or failures tied to specific cloud regions. Ensure your agents don't get stuck waiting on broken infrastructure.
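A sketch of region-level detection: group check results by cloud region and flag regions whose error rate or median latency crosses a threshold. The `(region, ok, latency_ms)` tuple format, the region names in the usage example, and the default thresholds are assumptions for illustration.

```python
import statistics
from collections import defaultdict
from typing import DefaultDict, Iterable, List, Tuple


def degraded_regions(
    results: Iterable[Tuple[str, bool, float]],
    max_error_rate: float = 0.2,
    max_median_ms: float = 800.0,
) -> List[str]:
    """Return regions whose error rate or median successful latency exceeds its threshold."""
    by_region: DefaultDict[str, List[Tuple[bool, float]]] = defaultdict(list)
    for region, ok, latency_ms in results:
        by_region[region].append((ok, latency_ms))

    flagged = []
    for region, checks in sorted(by_region.items()):
        error_rate = sum(1 for ok, _ in checks if not ok) / len(checks)
        # Median over successful checks only, so failures don't skew latency.
        ok_latencies = [latency for ok, latency in checks if ok]
        too_slow = bool(ok_latencies) and statistics.median(ok_latencies) > max_median_ms
        if error_rate > max_error_rate or too_slow:
            flagged.append(region)
    return flagged
```

For example, with checks from `us-east-1` all fast, `eu-west-1` failing half the time, and `ap-south-1` succeeding but slow, only the last two are flagged.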

Trace multi-step workflows between agents, APIs, and models. Identify where slowdowns, outages, or errors cause cascading failures.
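A sketch of locating where a cascading failure starts in a multi-step trace: a failed step counts as a likely root cause when none of its own child steps failed, and self time (a step's duration minus its children's) shows where a slowdown actually happens. The `Span` fields loosely mirror distributed-tracing spans but are a hypothetical structure, not a specific tracing library's API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    """One step in an agent workflow trace (hypothetical structure)."""

    span_id: str
    parent_id: Optional[str]  # None for the top-level agent task
    name: str
    ok: bool
    duration_ms: float


def root_causes(spans: List[Span]) -> List[str]:
    """Failed steps with no failed child: where a cascade most likely started."""
    has_failed_child = {s.parent_id for s in spans if not s.ok and s.parent_id is not None}
    return [s.name for s in spans if not s.ok and s.span_id not in has_failed_child]


def self_time_ms(spans: List[Span]) -> Dict[str, float]:
    """Each step's duration minus its children's, keyed by name.

    Assumes step names are unique and child steps run sequentially.
    """
    child_total: Dict[str, float] = defaultdict(float)
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] += s.duration_ms
    return {s.name: s.duration_ms - child_total[s.span_id] for s in spans}
```

In a trace where the agent, its retrieval step, and a vector database query all fail, only the vector database query is reported, because the two failures above it are consequences of it.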