SRE Report Retrospectives — Have AIOps Predictions Held Up?

Welcome to a new blog series where we take a candid look at the predictions, insights, and bold claims we've made in previous SRE Reports and ask the uncomfortable question: How did we do?

For the uninitiated, Catchpoint's SRE Report is our annual, practitioner-driven effort to capture the pulse of the global reliability community. Each year, we survey SREs, reliability engineers, and operations leaders worldwide to understand the current state of our profession, including the challenges we face, the tools we use, and the trends shaping our work. The reports serve as both a snapshot of where we are and a compass for where we're heading.

But here's the thing about predictions: they have this pesky habit of aging poorly. Technology evolves, markets shift, and what seemed revolutionary three years ago might be gathering dust in the corner of someone's Kubernetes cluster today. So we thought it would be fascinating, and perhaps a little humbling, to revisit our past claims and see how they've held up against the brutal reality of production environments.

First up in this retrospective series: AIOps. Buckle up, because this journey is equal parts vindication and "well, that didn't age well."

AIOps: The promise that wouldn't die

Let's start with a bit of history. Gartner coined the term "AIOps" in 2017, defining it as the application of artificial intelligence and machine learning to enhance IT operations through big data, analytics, and automation. Gartner positioned it as the future of IT operations: a way to handle the exponential growth in data volume, velocity, and variety that traditional monitoring tools simply couldn't manage.

The promise was intoxicating: imagine AI systems that could automatically detect anomalies, correlate events across your entire stack, predict failures before they happened, and even remediate issues without human intervention. It sounded like the holy grail of operations. Finally, we could move from reactive firefighting to proactive, intelligent infrastructure management.

What we said then: the SRE Report take on AIOps

Our 2021 SRE Report gave AIOps significant attention, reflecting the industry enthusiasm we were seeing everywhere. The potential was undeniable. AIOps promised to help us manage increasing data volumes and extract actionable insights that could transform how we approached monitoring and incident response.

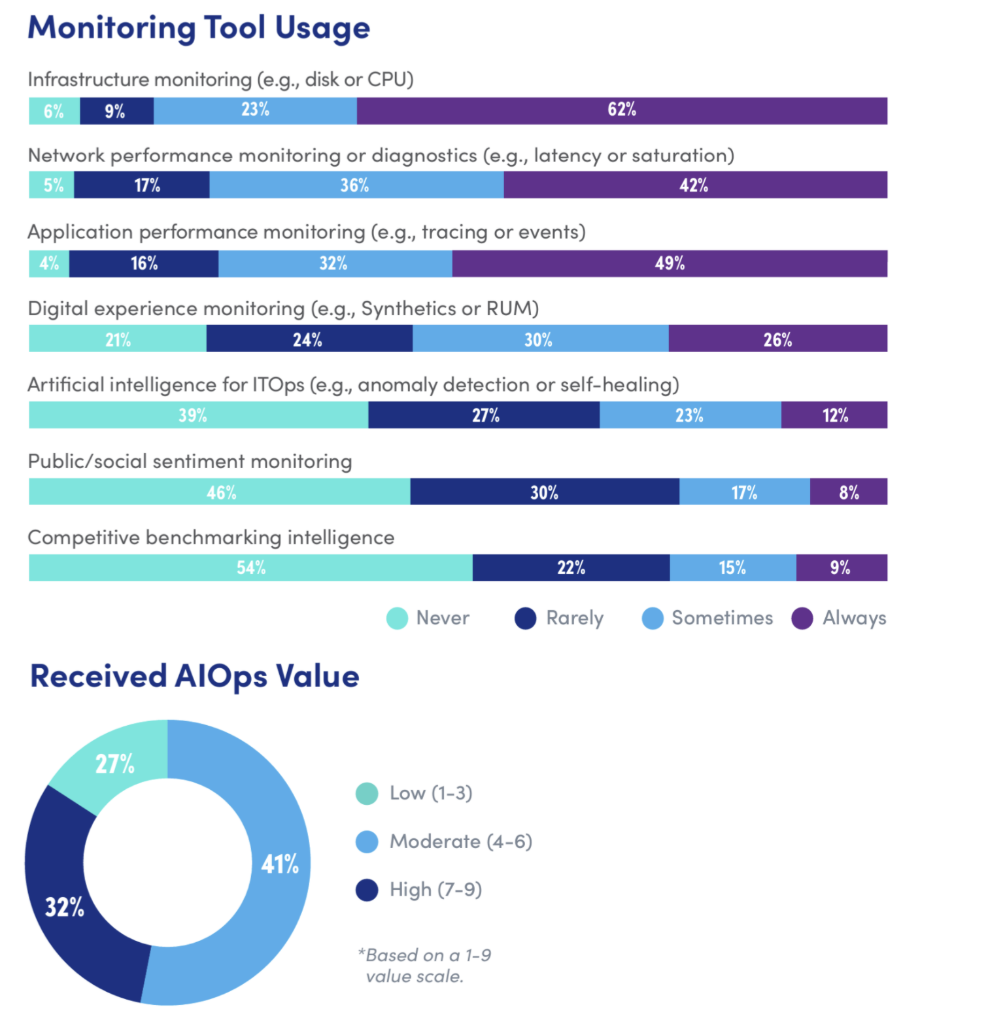

But while the industry was buzzing with excitement, our survey respondents told a different story. Real-world adoption among SREs was surprisingly slow. The gap between promise and practice was... significant.

Our recommendation then was pragmatic: break down AIOps into individual components rather than chasing the buzzword. Don't buy into the hype wholesale. Instead, evaluate specific capabilities like anomaly detection, event correlation, or automated remediation on their own merits. We also emphasized the need for AI and ML training within SRE teams, positioning this as a long-term investment rather than a quick fix.

2023: The plot thickens

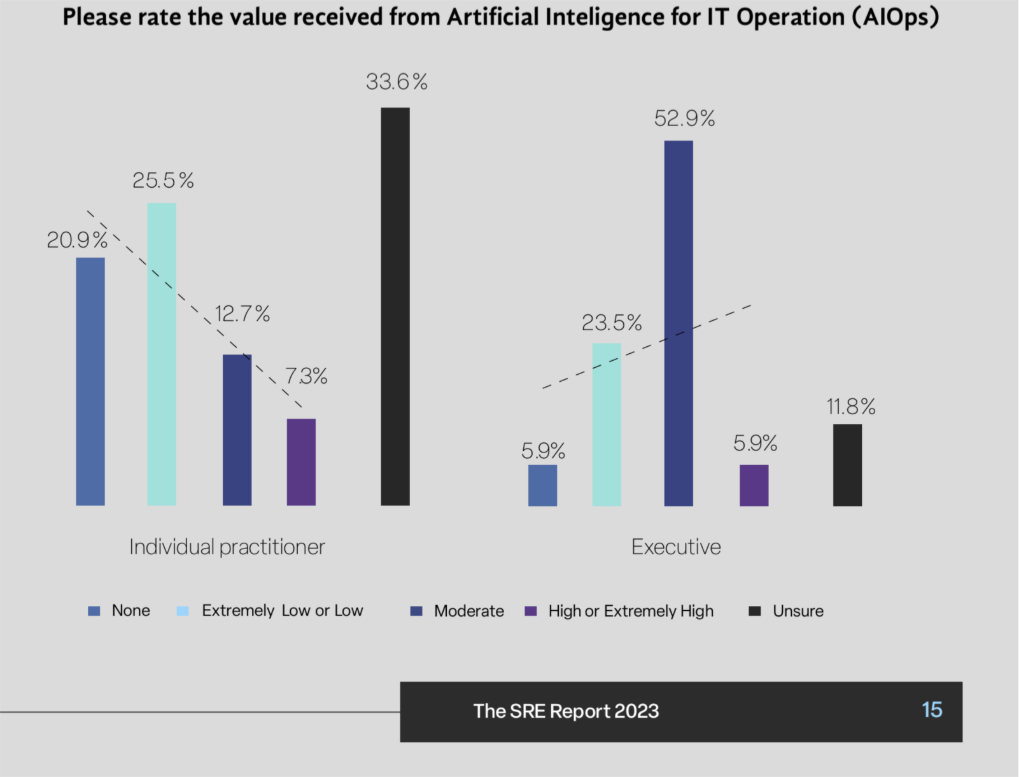

By the time our 2023 SRE Report rolled around, we had a year of additional data to work with. We asked respondents to rate the "value received" from AIOps for a second year running, and the results were... illuminating.

The majority of reliability practitioners continued to report that the value they received from AIOps was low or nonexistent. But when we broke down responses by organizational level, a fascinating pattern emerged: 59% of executives said they received moderate or high value from AIOps, while only 20% of individual practitioners said the same.

Read that again. Let it sink in.

We had a classic case of the people making the purchasing decisions seeing real value, while the people actually using the tools in production were largely unimpressed. The perception gap between leadership and practitioners was huge: 39 percentage points!

Our advice remained consistent: don't ignore AIOps entirely, but decompose it into specific capabilities that meaningfully support your observability and reliability operations. Focus on pragmatic use cases, not vendor promises.

2024-2025: The pivot

In 2024 we broadened our survey questions from "AIOps specifically" to "AI in general," adding qualifiers about expectations "within the next two years." This shift reflected the rapidly evolving AI landscape and the rise of generative AI.

As one of our field contributors noted: "It's hard to know whether this is another AI hype cycle or an intensification of the previous one, but it feels like there is something genuinely different between the (rather short on detail) promotion of AIOps, and what's happening with GenAI."

The distinction was crucial. Traditional AIOps remained narrowly focused on anomaly detection and analysis within existing command-and-control frameworks, essentially "business-as-usual, with go-faster stripes." GenAI, however, represented something fundamentally different: "more like dealing with a very early-stage co-worker, who needs training and investment and constant review, but can occasionally be really valuable."

That was then, this is now: the Google Trends reality check

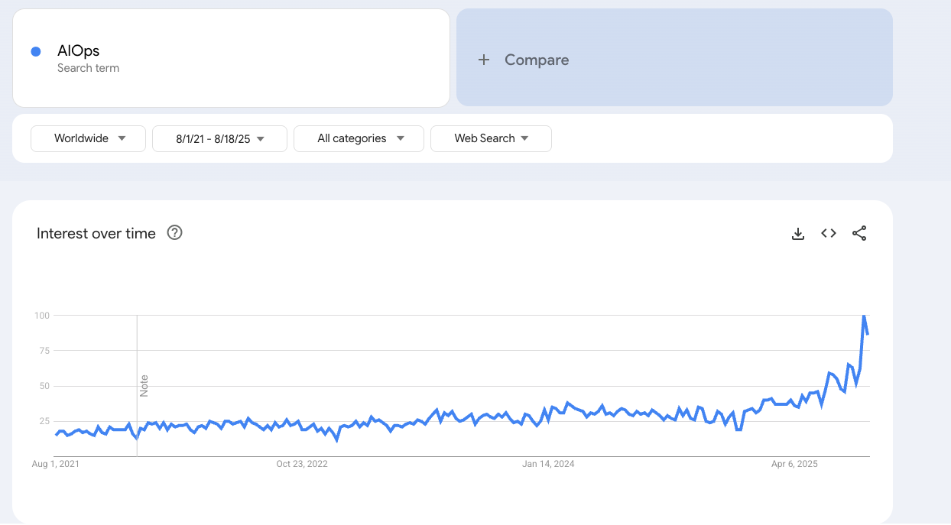

So that was our take from the SRE trenches. But how do you actually measure adoption of something like AIOps in the broader market? It's crude, but you could do worse than looking at Google search trend data.

Why Google Trends? Two compelling reasons:

- First, it tracks real search interest, not just hype. Google Trends shows how search interest in a topic rises and falls over time (as a relative index, not raw search counts), a direct window into what the market, professionals, and the curious want to learn about or evaluate.

- Second, it's independent and vendor-neutral. Unlike vendor surveys or analyst reports, Trends is not produced by a stakeholder seeking to sell or promote something. It reflects organic search behavior from millions of users across the world.

So, what do we learn from Google’s search trend data?

The AIOps search interest explosion (2024-2025)

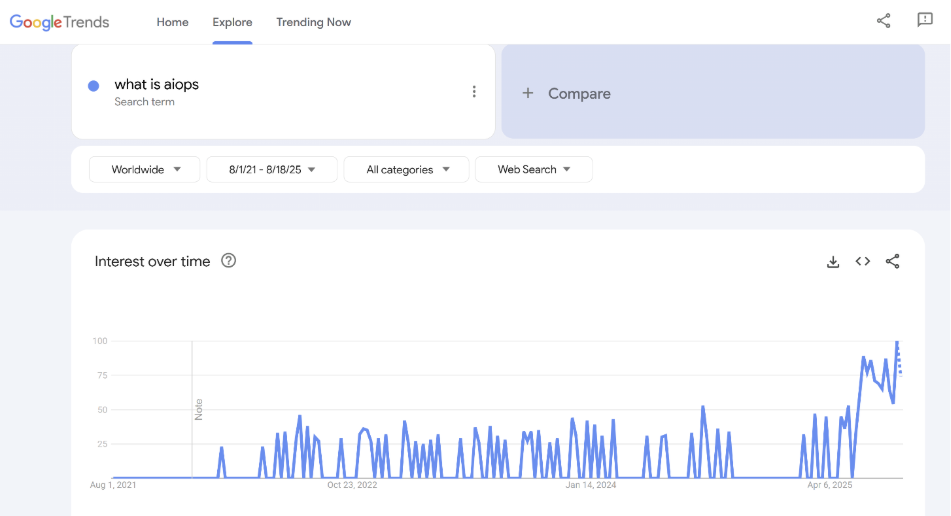

The most striking finding is the dramatic spike in "AIOps" searches starting in late 2023/early 2024, reaching peak interest by 2025.

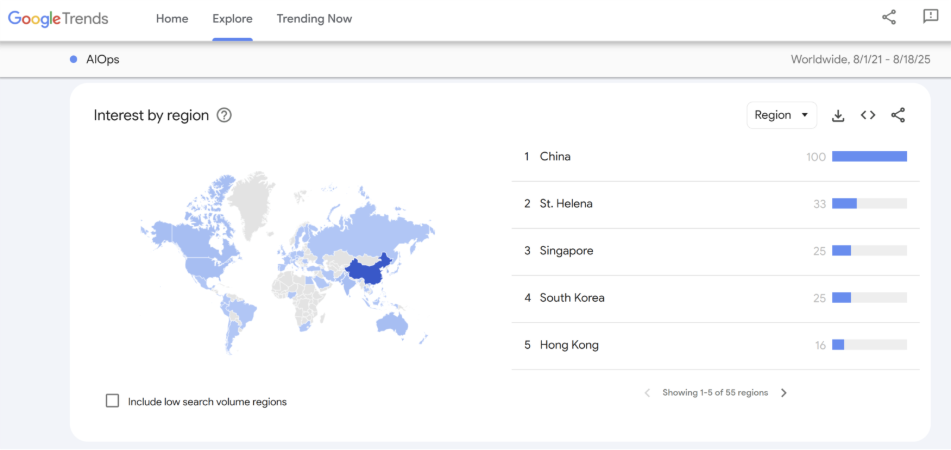

Regional concentration in Asia-Pacific

The geographic data shows that AIOps interest is heavily concentrated in Asia-Pacific:

- China (relative interest score of 100, the maximum)

- Singapore, South Korea, Hong Kong (relative interest scores of 13 to 21)

This may reflect different IT infrastructure challenges in APAC markets, different technology adoption patterns, or different expectations around AI-driven operations.
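Google Trends reports interest as an index from 0 to 100, not as raw counts or percentages. For regional data, Google describes 100 as the location where the term makes up the largest fraction of that location's total searches. Here is a minimal sketch of that scaling, using hypothetical regions and made-up counts (the function name is illustrative, and Google's sampling and rounding details are not reproduced):

```python
def relative_interest(term_searches: dict[str, int],
                      total_searches: dict[str, int]) -> dict[str, int]:
    """Approximate Google Trends-style regional scores (0-100).

    Each region's searches for the term are divided by that region's total
    searches, then rescaled so the region with the highest share scores 100.
    """
    shares = {region: term_searches[region] / total_searches[region]
              for region in term_searches}
    peak = max(shares.values())
    return {region: round(100 * share / peak) for region, share in shares.items()}


if __name__ == "__main__":
    # Hypothetical counts: Region C has a third as many AIOps searches as
    # Region B, but scores higher because its total search volume is smaller.
    term = {"Region A": 500, "Region B": 90, "Region C": 30}
    totals = {"Region A": 1_000_000, "Region B": 1_000_000, "Region C": 200_000}
    print(relative_interest(term, totals))
    # {'Region A': 100, 'Region B': 18, 'Region C': 30}
```

The takeaway: a score of 100 marks the region where the term takes up the largest share of search activity, not the region with the most searches, so regional scores say more about relative focus than about absolute market size.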

The educational curve

The "what is AIOps" search trend shows cyclical spikes rather than sustained growth, suggesting periodic waves of discovery instead of steady adoption. Many people still appear to be learning about AIOps rather than implementing it; the concept remains nascent despite years of industry discussion.

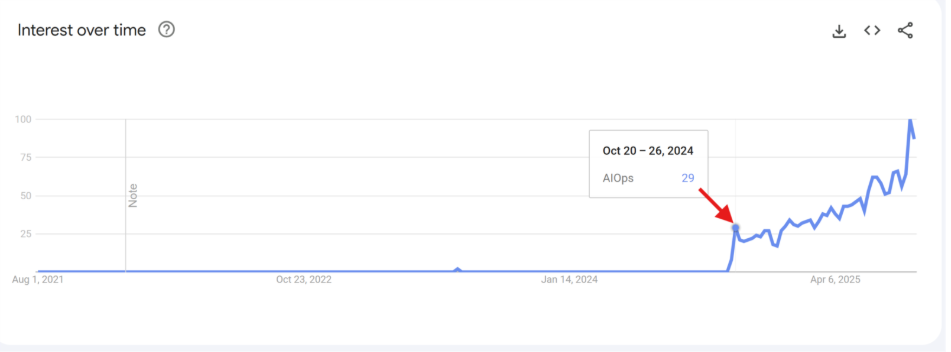

The October 2024 inflection point

But here's where the story gets really fascinating. Search interest for AIOps absolutely exploded in October 2024.

What happened?

The perfect storm

October 2024 created a perfect storm for AIOps interest:

- Gartner Magic Quadrant for Digital Experience Monitoring (October 21, 2024): For the first time ever, Gartner published a Magic Quadrant for Digital Experience Monitoring, which counts AIOps capabilities among its evaluation criteria. Companies like Dynatrace and Catchpoint were named Leaders, generating significant industry attention and validating the AIOps space.

- IBM Cloud Pak for AIOps v4.7 Release (October 11, 2024): IBM announced a major update with production-ready Linux deployment capabilities, signaling enterprise readiness.

- Nokia's AIOps Integration (October 18, 2024): Nokia integrated AI-driven operations into its Altiplano Access Controller, showing AIOps expanding beyond traditional IT into network infrastructure.

- ServiceNow Educational Push: Dedicated AIOps workshops and training, indicating vendors were investing heavily in market education.

- Multiple Vendor Milestones: From Motadata's next-gen platform to Keep raising $2.7M for its open-source AIOps platform, the entire ecosystem seemed to mature simultaneously.

This convergence likely explains why Google Trends shows such a dramatic spike. October 2024 looks like the moment AIOps moved from "emerging technology" to "mainstream enterprise solution," with multiple validation points landing at once.

And what does the market actually say?

The numbers are impressive: the global AIOps market is expanding at a compound annual growth rate of more than 25%, projected to grow from $11.16B in 2025 to over $32B by 2029. Around 40% of enterprises now employ AIOps to some extent, with adoption rates especially high in regulated and data-intensive industries.
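As a quick sanity check, the growth rate implied by those two endpoints follows from the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, with four years between 2025 and 2029. A minimal sketch using only the figures quoted above:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market-size endpoints quoted above, in billions of USD (2025 and 2029).
    rate = cagr(11.16, 32.0, years=2029 - 2025)
    print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 30.1%

    # For comparison: growing exactly 25% a year from $11.16B for four years.
    print(f"$11.16B at 25% for 4 years: ${11.16 * 1.25 ** 4:.1f}B")
```

So the quoted endpoints imply growth of roughly 30% a year, consistent with "more than 25%"; a flat 25% would only reach about $27B by 2029.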

But here's the million-dollar question: are we seeing hype cycles that don't align with practitioner reality, or is the early promise of AIOps finally materializing?

Putting it all together: what we got right (and what we missed)

Our 2021 and 2023 recommendations still hold water:

- Break down AIOps into discrete components: The market has largely validated this approach. Successful AIOps implementations focus on specific capabilities, such as anomaly detection, event correlation, and automated remediation, rather than attempting to solve everything at once.

- Focus on pragmatic use cases: Organizations seeing value from AIOps are those that identified clear, measurable problems and applied AI/ML tools strategically to solve them.

- Invest in training: The most successful organizations we've observed have invested in AI and ML literacy for their SRE teams, treating it as a long-term capability rather than a silver bullet.
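To make "discrete components" concrete, here is what evaluating one capability on its own merits might look like for anomaly detection. This is a minimal sketch, assuming a plain list of metric samples: a rolling z-score detector, a common statistical baseline rather than the method any particular AIOps product uses. The function name, window size, and threshold are hypothetical choices you would tune against your own incident history.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices of points that deviate from the mean of the preceding
    `window` points by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    history = deque(maxlen=window)  # sliding baseline of recent points
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all counts as anomalous.
                if value != mu:
                    anomalies.append(i)
            elif abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        # Anomalous points also enter the baseline. This keeps the sketch short,
        # but can mask back-to-back anomalies; a sturdier version might skip them.
        history.append(value)
    return anomalies


if __name__ == "__main__":
    # Hypothetical latency samples (ms) with one obvious spike.
    latency_ms = [120, 118, 122, 119, 121, 120, 480, 121, 119]
    print(rolling_zscore_anomalies(latency_ms))  # [6]
```

Scoring a simple baseline like this against past incidents (how many real incidents it flags, how many false alarms it raises) gives you a yardstick for judging whether a vendor's anomaly detection actually adds value.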

What we perhaps underestimated was the patience required for the market to mature and the role that broader AI developments (particularly GenAI) would play in legitimizing and advancing AIOps capabilities.

What’s next: SRE and AI at an inflection point

The 2026 SRE Report, coming in January, will carry forward the story of AIOps and show how AI itself is changing the DNA of site reliability. Without giving too much away, we can share that sentiment around AI in SRE has shifted dramatically, and the numbers will surprise you. Is 2026 poised to be the year when belief in AI moves beyond theory? Watch this space, and subscribe to our email insights to get the report first.

Summary

AIOps quickly became one of the most debated and heavily promoted concepts in IT operations, but reality didn’t match the initial hype. While industry leaders embraced it, frontline SREs remained skeptical. So did those early predictions hold up? The answer: it’s complicated.