SRE Report: AI optimism and the economics of effort

For eight years, the survey behind the SRE Report has used a consistent methodology. That consistency allows us to track how reliability work evolves over time, rather than relying on snapshots.

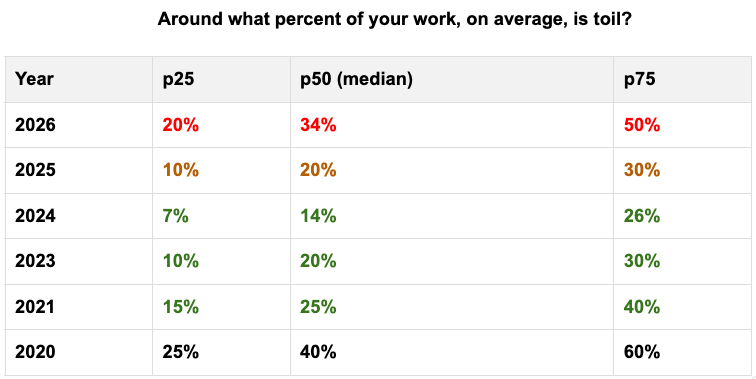

One of the most stable questions in the survey asks respondents to estimate what share of their working time, on average, is spent on toil.

Between 2020 and 2024, responses showed a gradual decline in reported toil. That trend aligned with broader shifts in how teams worked during and after the COVID period, including changes in operating models and reduced manual intervention.

In 2025, that trend reversed. Reported toil increased for the first time in five years.

At the time, we referenced a hypothesis from Google’s DORA research suggesting that AI may accelerate value realization, with teams then filling regained capacity with additional operational work rather than eliminating it entirely. Going into this year’s analysis, we were interested to see whether that change was temporary.

The data suggests it was not.

In the 2026 report, median reported toil increased again, rising to 34% from 20% the previous year.

At face value, this result appears to sit uncomfortably alongside the growing presence of AI in day-to-day reliability work. Many respondents report widespread use of AI-assisted tooling across alerting, diagnosis, and remediation. Expectations of impact have risen sharply.

However, the relationship between AI adoption and toil is more nuanced than a simple before-and-after comparison would suggest. As you’ll see, much depends on who’s asking and how clearly teams can observe what AI is actually doing.

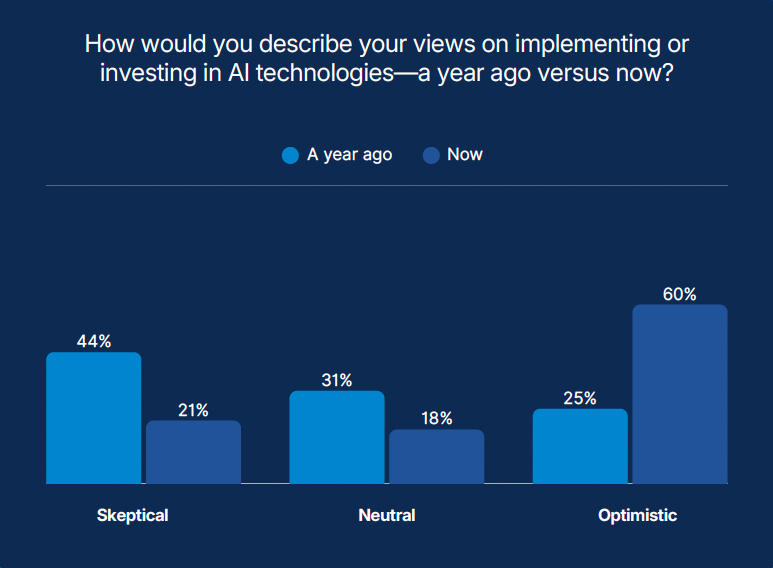

Sentiment toward AI changed substantially

Positive outlooks strengthened, indicating a clear inflection.

This shift is not surprising. Over the past year, AI tools have become more accessible, more capable, and more integrated into existing workflows. For many teams, AI is no longer experimental. It is present in everyday operations.

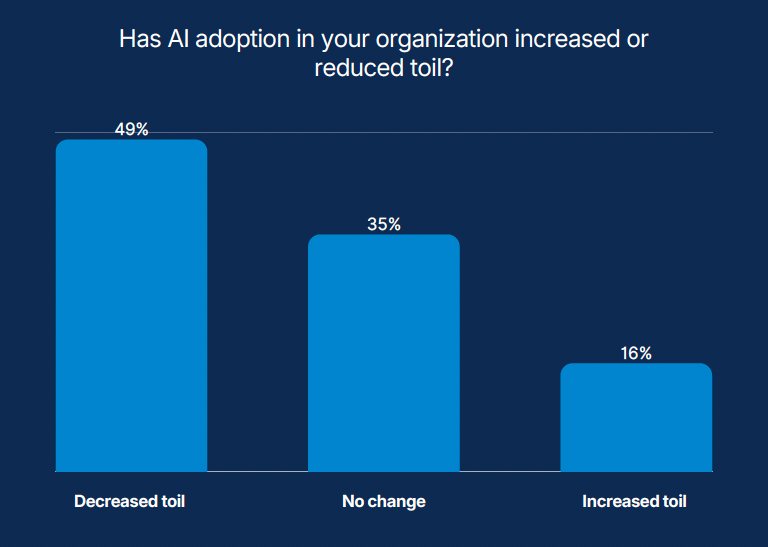

However, when we asked whether AI adoption has increased or reduced toil, the answers were uneven.

- 49% say AI has decreased toil.

- 35% say there has been no change.

- 16% say toil has increased.

The commentary sums it up neatly: “AI does not remove toil automatically; it redistributes it.” Some repetitive tasks get faster or disappear. New ones appear around the edges: maintaining AI tools, reviewing their suggestions, tuning prompts, checking that actions taken by or with AI are correct, and explaining those actions to others.

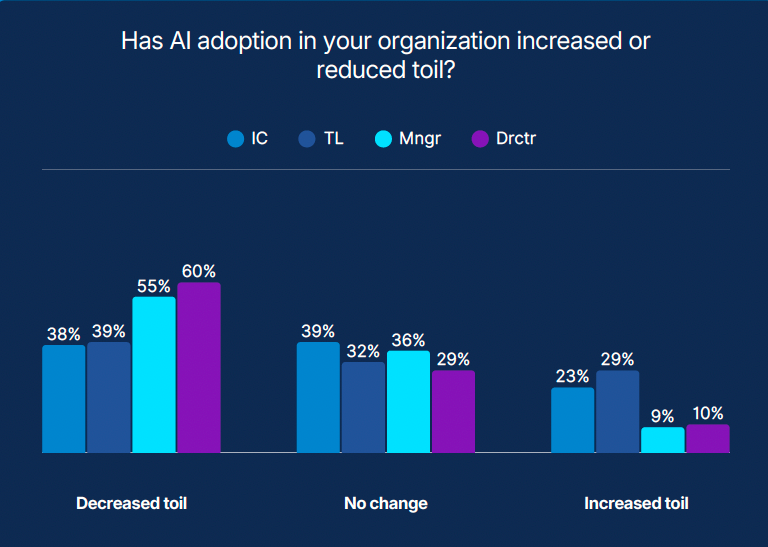

The picture becomes sharper, however, when you look at roles.

Toil outcomes vary sharply by role

Here’s what happened when we segmented toil data by role.

Respondents in leadership and management positions are more likely to report that AI has reduced toil. Individual contributors are more likely to report that toil has stayed the same or increased.

It’s important to note that this gap does not imply that one group is wrong. It usually means they are looking at different parts of the picture.

Directors and managers* feel gains when incident reports are clearer, dashboards load faster, or summaries appear automatically. They see shorter meetings, simpler handoffs, and less time spent chasing information. Individual contributors feel the friction of running new tools, checking results, plugging outputs into existing workflows, and recovering when an automated step acts on incomplete data.

Both views can be true at the same time. AI can reduce toil at the planning and coordination level while adding work at the keyboard if the surrounding systems are not ready for it.

The split in perceptions starts to make more sense when you ask a simpler question: how sure are teams that their AI is behaving reliably in the first place?

Confidence in AI reliability remains limited

We asked respondents how confident they feel in their ability to assess and monitor the reliability of AI and ML components in their systems.

The answers were cautious.

Only 13% describe themselves as very or extremely confident in their ability to monitor AI reliability.

This result matters less as a judgment and more as context. Many teams are adopting AI quickly, but far fewer feel they can clearly observe how AI-driven components behave once they are in production.

That lack of confidence can change how work feels.

When engineers cannot easily see why an AI-assisted decision was made, or how it flowed through a system, time shifts from execution to verification. Outputs are checked more carefully. Results are replayed or revalidated. Incidents take longer to explain, even when they are resolved quickly.

From the outside, this looks like toil persisting. From the inside, it often feels like caution.

How monitoring shapes AI’s impact

This may help explain why role‑based perceptions differ. People further from day‑to‑day execution are more likely to experience AI through the results that monitoring surfaces: summaries appear faster, patterns surface sooner, coordination improves, and the system feels easier to reason about.

People closer to the work experience AI through its behavior and the gaps in visibility. They see where signals are missing, where dependencies are unclear, and where automated actions require follow‑up. When visibility is incomplete, AI adds another layer that has to be interpreted rather than trusted outright.

AI raises the bar for observability

AI delivers the greatest impact when telemetry is clean, complete, and connected, but most teams are not there yet. When observability foundations are strong, AI can accelerate understanding, reduce effort, and improve reliability outcomes at scale. Unlocking that value requires broader and higher‑quality telemetry across experience, delivery paths, and systems, so automation and insight are built on signals that can be trusted.

Teams with broader visibility across user experience, delivery paths, and system dependencies tend to report more confidence in AI’s impact, while teams with narrower visibility report more mixed results and more manual checking. This does not imply that visibility guarantees better outcomes, but it does suggest that confidence grows when teams can see how AI fits into the system as a whole, rather than treating it as a separate capability.

Learn more

- Read the full SRE Report 2026

Review the full dataset and analysis on how reliability practices, performance expectations, and operational effort are evolving.

- See how teams monitor AI stack resilience

Learn how AI stack resilience monitoring helps teams assess the reliability of AI-driven systems across infrastructure, dependencies, and delivery paths.

* IC = individual contributor, TL = team lead or lead, Mngr = manager, Drctr = director

"practitioners" = IC + TL

"management" = Mngr + Drctr