What Can We Learn from AWS’s December Outagepalooza?

Top takeaways and lessons learned from the December 2021 AWS outages

2021’s slew of Internet outages and disruptions shows how interconnected and relatively fragile the Internet ecosystem is. Case in point: December’s trifecta of Amazon Web Services (AWS) outages, which brought home the fact that no service is too big to fail:

- 12/07/2021. Millions of users were affected by this extended outage originating in the US-EAST-1 region, which took down major online services such as Amazon, Amazon Prime, Amazon Alexa, Venmo, Disney+, Instacart, Roku, Kindle, and multiple online gaming sites. The outage also took down the apps that power warehouse, delivery, and Amazon Flex workers—in prime holiday shopping season. The AWS status dashboard noted that the root cause of the outage was an impairment of several network devices.

- 12/15/2021. Originating in the US-WEST-2 region in Oregon and US-WEST-1 in Northern California, this incident lasted about an hour and brought down major services such as Auth0, Duo, Okta, DoorDash, Disney, the PlayStation Network, Slack, Netflix, Snapchat, and Zoom. According to the AWS status dashboard, "The issue was caused by network congestion between parts of the AWS Backbone and a subset of Internet Service Providers, which was triggered by AWS traffic engineering, executed in response to congestion outside of our network."

- 12/22/2021. This incident was triggered by a data center power outage in the US-EAST-1 region, causing a cascade of issues for AWS customers such as Slack, Udemy, Twilio, Okta, Imgur, Jobvite, and even the NY court system website. Although the outage itself was relatively brief, its effects proved vexingly persistent: some AWS users continued to experience related problems up to 17 hours later.

The reality is that the next outage is a question not of if, but of when, where, and for how long. Pretending that outages won’t happen is not only pointless but harmful to your business. Looking back at the three December outages, we see four key takeaways:

1. Early detection is key to handling outages like the AWS incidents.

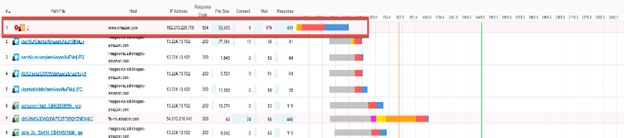

Catchpoint observed all three outages well before they hit the AWS status page:

- 12/7/2021: Here at Catchpoint, we observed connectivity issues for AWS servers starting at 10:33 AM ET, considerably earlier than the announcement posted to the AWS Service Health Dashboard at 12:37 PM ET.

- 12/15/2021: Catchpoint noted the outage at approximately 10:15 AM ET, once again before the AWS announcement at about 10:43 AM ET.

- 12/22/2021: Catchpoint first observed issues at 07:11 AM ET, 24 minutes ahead of the AWS announcement.

Early detection allows companies to fix problems, potentially before they impact customers, and to implement contingency plans that ensure smooth failover as soon as possible. If the issue continues, it also allows them to proactively inform customers with precise details about the situation and assure them that their teams are working on it.
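To put numbers on that head start, the short sketch below computes the detection lead time from the timestamps quoted above. It assumes both times for each incident are in the same time zone, and it treats the approximate 12/15 times as exact. The function name `lead_minutes` is purely illustrative.

```python
from datetime import datetime

# Times (ET) quoted above: (first external observation, AWS announcement).
# The 12/15 values are approximate in the source.
OBSERVATIONS = {
    "12/7/2021": ("10:33", "12:37"),
    "12/15/2021": ("10:15", "10:43"),
}


def lead_minutes(detected: str, announced: str) -> int:
    """Minutes between external detection and the provider's announcement."""
    fmt = "%H:%M"
    delta = datetime.strptime(announced, fmt) - datetime.strptime(detected, fmt)
    return int(delta.total_seconds() // 60)


for day, (detected, announced) in OBSERVATIONS.items():
    print(f"{day}: detected {lead_minutes(detected, announced)} minutes early")
```

For the two incidents with both timestamps quoted, this works out to 124 and 28 minutes respectively. For 12/22, the text gives the 24-minute lead directly.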

2. Comprehensive observability helps your team react at speed to outages.

While it may be tempting to leave AWS monitoring to, well, AWS, that could leave you in the dark, observability-wise. A comprehensive digital observability plan should include not only your own technical elements, but also service delivery chain components that are not within your control. For example, you need insight into the systems of third-party vendors such as content delivery networks (CDNs), managed DNS providers, and backbone Internet service providers (ISPs).

While these might not be your code or hardware, your users will still be impacted by any issues they experience, and so will your business. If you are observing your end-to-end experiences, you can act when things that are out of your control are impacting your users.

Comprehensive observability also means continuously observing your systems to detect failures in fundamental components such as DNS, BGP, TCP configuration, SSL, the networks the data traverses, or any rarely changed single point of failure in the infrastructure.

This issue is exacerbated by the fact that the cloud has abstracted a lot of the underlying network away from development, operations, and network teams. That can make it harder to find a problem.

As a result, it can catch us by surprise when these fundamental components fail. If teams are not properly prepared, that adds needless and costly time to detecting, confirming, or finding the root cause of a failure. Therefore, ensure that you continuously monitor these aspects of your system and train your teams on what to do in case of failure.
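As a rough illustration of what checking these fundamental layers can look like, here is a minimal sketch that times DNS resolution, the TCP connect, and the TLS handshake separately, so a failure can be attributed to a specific layer. It uses only the Python standard library. The function name `probe` and the shape of its result are assumptions made for this example, not any vendor's API, and a production check would also need to cover BGP and routing, which a single-host probe cannot see.

```python
import socket
import ssl
import time


def probe(host: str, port: int = 443, use_tls: bool = True,
          timeout: float = 5.0) -> dict:
    """Time DNS, TCP connect, and (optionally) the TLS handshake.

    Returns per-layer durations in milliseconds. If a layer fails, the
    dict gains an "error" entry prefixed with that layer's name.
    """
    result = {}
    stage = "dns"
    sock = None
    try:
        start = time.monotonic()
        # Note: the timeout below does not apply to name resolution.
        family, socktype, proto, _, addr = socket.getaddrinfo(
            host, port, type=socket.SOCK_STREAM)[0]
        result["dns_ms"] = (time.monotonic() - start) * 1000

        stage = "tcp"
        start = time.monotonic()
        sock = socket.socket(family, socktype, proto)
        sock.settimeout(timeout)
        sock.connect(addr)
        result["tcp_ms"] = (time.monotonic() - start) * 1000

        if use_tls:
            stage = "tls"
            start = time.monotonic()
            context = ssl.create_default_context()  # verifies the certificate
            sock = context.wrap_socket(sock, server_hostname=host)
            result["tls_ms"] = (time.monotonic() - start) * 1000
    except OSError as exc:  # covers DNS, socket, timeout, and TLS errors
        result["error"] = f"{stage}: {exc}"
    finally:
        if sock is not None:
            sock.close()
    return result
```

Calling `probe("example.com")` on a schedule, from locations outside your own infrastructure, and alerting on the `error` field or on unusual timings is one simple way to notice when a layer you rarely touch has stopped working.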

3. Ensuring your company’s availability and business continuity is not a solo endeavor.

The AWS incidents all clearly illustrate the downstream effect that an outage at one company can have on others. Digital infrastructure will assuredly continue to grow more complex and interconnected. Enterprises today run systems that span multiple clouds. They also rely on multiple teams, often including a raft of outside vendors for services such as cloud compute, CDNs, and managed DNS. When issues originating with outside entities such as partners and third-party providers can bring down your systems, it is time to build a collaborative strategy designed to support your extended digital infrastructure. For that, comprehensive observability into every service provider involved in the delivery of your content is crucial.

4. Depending only on a monitoring solution hosted within the environment being monitored is not enough.

While there are many monitoring solutions out there, make sure that you have a "break glass system" that lets you fail over to a solution outside of the environment being monitored. Many visibility solutions are located in the cloud, which makes them vulnerable when cloud technologies go down.

This is why ThousandEyes, Datadog, Splunk (SignalFX), and New Relic all reported impacts from the 12/07/2021 and 12/15/2021 events.

During the first event, Datadog reported delays that impacted multiple products, Splunk (SignalFX) reported that their AWS cloud metric syncer data ingestion was impacted, and New Relic reported that some AWS Infrastructure and polling metrics were delayed in the U.S.

There were also a number of issues triggered by the 12/15/2021 event:

- Datadog reported delays in collecting AWS integration metrics.

- ThousandEyes reported degradation to API services.

- New Relic reported that their synthetics user interface was impacted, as well as some of the APM alerting and the data ingestion for their infrastructure metrics.

- Dynatrace reported that some of their components hosted on an AWS cluster were impacted.

- Splunk (Rigor and SignalFX) reported increased error rates on the West Coast of the U.S. and a degradation in the performance of their Log Observer.

Lack of observability is never a good thing, but during an outage, it is significantly worse. Interestingly, AWS’s Adrian Cockcroft pointed out the issue in a post on Medium, where he noted:

"The first thing that would be useful is to have a monitoring system that has failure modes which are uncorrelated with the infrastructure it is monitoring."
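One way to act on that advice is to track where each monitor itself runs, and to treat the absence of a fresh, independent signal as a reason to break glass rather than as good news. The sketch below is illustrative only: the `Signal` fields, the `assess` function, the status strings, and the 120-second freshness threshold are all hypothetical choices for this example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Signal:
    """One view of service health, tagged with where the monitor runs."""
    source: str         # hypothetical label, e.g. "in-cloud agent"
    hosted_on: str      # environment the monitor itself depends on
    age_seconds: float  # time since this monitor last reported
    healthy: bool       # what it last reported


def assess(signals: Sequence[Signal], monitored_env: str,
           max_age: float = 120.0) -> str:
    """Classify the combined picture as "outage", "blind", or "healthy".

    A monitor that shares an environment with the system it watches can
    go silent in the same outage, so a lack of fresh independent signals
    is reported as "blind" instead of being assumed healthy.
    """
    fresh = [s for s in signals if s.age_seconds <= max_age]
    if any(not s.healthy for s in fresh):
        return "outage"
    independent = [s for s in fresh if s.hosted_on != monitored_env]
    if not independent:
        return "blind"  # break glass: fail over to an outside view
    return "healthy"
```

With only an in-cloud agent reporting, `assess` returns `"blind"` even when that agent says everything is fine, which is the point: a healthy verdict requires at least one fresh signal whose failure modes are not tied to the monitored environment.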