When metrics mislead: Inside the 2025 Retail Web Performance Benchmark

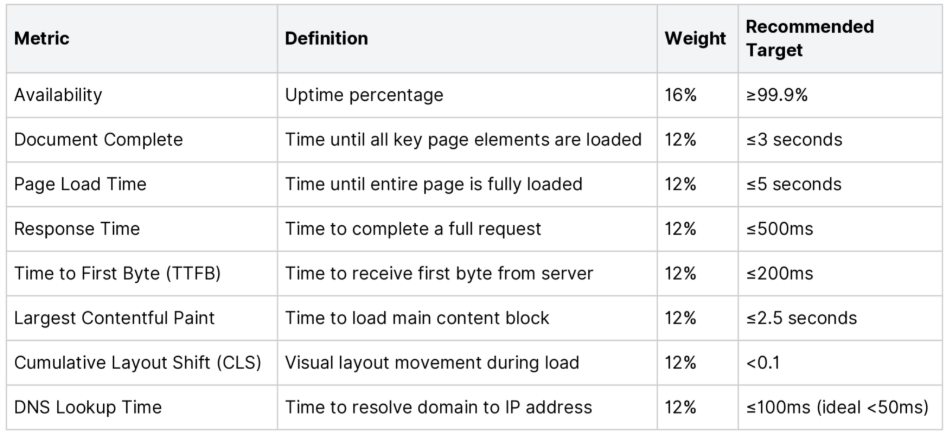

Over the past few years at Catchpoint, we’ve benchmarked the digital performance of banks, airlines, hotels, travel aggregators, GenAI platforms, athletic footwear brands, and even ad hoc events like the Super Bowl, Olympics, and Election Day. Each time, our approach focused on the technical metrics performance professionals live and breathe: DNS resolution times, Time to First Byte, page load speeds, and the rest of our eight core measurements, which we'd dissect, analyze, and use to rank companies.

The process was thorough, data-driven, and produced insights that resonated with our technical audience. But as we prepared our latest retail benchmark report, something about this approach didn’t sit right.

Why experience was the missing piece

Anyone who works in web performance or SRE knows this feeling: users are complaining about slow page loads, but when you check the dashboard, everything's green. Your DNS is resolving in under 50ms, TTFB looks solid, and your availability is at 99.9%. Yet somehow, real users are still having a terrible experience.

This disconnect between technical metrics and user reality was the unspoken challenge in our previous reports. Who really cared if Marriott had the highest document complete time in a two-week snapshot? The user on the street doesn't care about milliseconds or Cumulative Layout Shift values. When they press a button, they just want speed and responsiveness.

So what if we could rank the companies we tested not by their technical metrics, but by the actual experience they provided to end users?

What are Experience Scores?

For our new retail report, we decided to try something different. Actually, that's not quite accurate — we decided to use something we already had, something we'd been leveraging for Catchpoint customers for years. It was staring right at us in plain sight: Experience Scores.

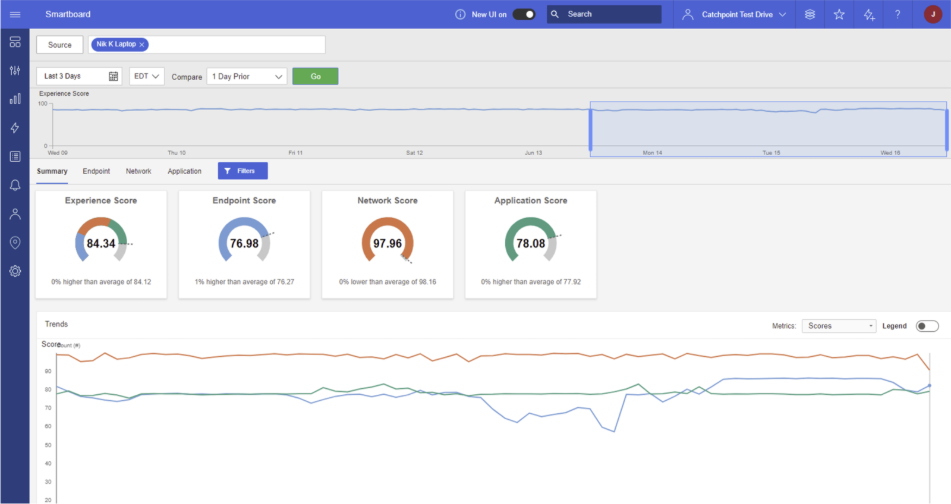

Experience Scores aggregate endpoint, network, and application insights into a single number between 0 and 100 that reflects what customers actually experience when interacting with a website or application. Traditional infrastructure metrics tell you about your systems; Experience Scores tell you about the experience of your users.

Made possible by our Global Agent Network, they capture a holistic picture, accounting for device performance variations, network quality, real-world latency, and the interactive experience quality that affects user satisfaction. After all, users don’t live in the cloud: last-mile conditions matter for user experience, and measuring them is a key differentiator of the Catchpoint platform. So we decided to rank retailers by their Experience Scores.
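This post doesn't spell out how an Experience Score is calculated, so the sketch below is only a generic illustration of the idea of collapsing several signals into one 0-100 number, not Catchpoint's actual formula. It assumes each input is a lower-is-better timing with hypothetical "good" and "poor" thresholds, maps each one linearly onto 0-100, and takes a weighted mean. The `Signal` class, signal names, thresholds, and weights are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    """One hypothetical input to a composite score; lower values are better."""
    name: str
    value: float   # measured value, e.g. milliseconds
    good: float    # at or below this, the signal scores 100
    poor: float    # at or above this, the signal scores 0
    weight: float  # relative importance in the composite


def signal_score(s: Signal) -> float:
    """Linearly map a lower-is-better measurement onto 0..100, clamped at both ends."""
    if s.value <= s.good:
        return 100.0
    if s.value >= s.poor:
        return 0.0
    return 100.0 * (s.poor - s.value) / (s.poor - s.good)


def composite_score(signals: list[Signal]) -> float:
    """Weighted mean of per-signal scores; the result stays within 0..100."""
    total_weight = sum(s.weight for s in signals)
    return sum(signal_score(s) * s.weight for s in signals) / total_weight


# Hypothetical endpoint, network, and application signals.
signals = [
    Signal("device_render_ms", 40, good=50, poor=500, weight=1.0),
    Signal("network_rtt_ms", 120, good=50, poor=300, weight=1.0),
    Signal("interaction_delay_ms", 350, good=200, poor=1000, weight=2.0),
]
print(round(composite_score(signals), 1))
```

Because it is a weighted mean, the composite stays on the same 0-100 scale as its inputs; a production scoring model would likely use non-linear curves and many more signals.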

How did we run the study?

We tested brands from the NRF Top 50 Global Retailers list for three weeks. But we didn't just measure Experience Scores. We also captured all eight traditional performance metrics we'd always used, applying the same weighted methodology from our previous reports.

This dual approach would let us compare how traditional technical rankings stacked up against actual user experience.
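The weighted methodology from our previous reports isn't detailed here, so the following is a hedged sketch of one common way to build a weighted ranking across several metrics: rank retailers on each metric separately, then order them by the weighted average of those per-metric ranks. The function name, metric names, weights, and values are hypothetical, and every metric is assumed to be lower-is-better (a higher-is-better metric such as availability would need its sort order reversed).

```python
def weighted_rank(metrics: dict[str, dict[str, float]],
                  weights: dict[str, float]) -> list[str]:
    """Order retailers by the weighted average of their per-metric ranks.

    `metrics` maps retailer -> {metric name -> value}. Every metric is assumed
    lower-is-better (e.g. milliseconds), so rank 1 goes to the smallest value.
    """
    retailers = list(metrics)
    total_weight = sum(weights.values())
    weighted_sum = {r: 0.0 for r in retailers}
    for metric, weight in weights.items():
        # Rank retailers on this metric alone; sorted() is stable, so ties keep input order.
        ordered = sorted(retailers, key=lambda r: metrics[r][metric])
        for position, retailer in enumerate(ordered, start=1):
            weighted_sum[retailer] += weight * position
    # A lower weighted average rank means a better overall technical position.
    return sorted(retailers, key=lambda r: weighted_sum[r] / total_weight)


# Made-up values for three hypothetical retailers.
metrics = {
    "Retailer A": {"dns_ms": 20, "ttfb_ms": 300, "onload_ms": 2500},
    "Retailer B": {"dns_ms": 40, "ttfb_ms": 200, "onload_ms": 1800},
    "Retailer C": {"dns_ms": 30, "ttfb_ms": 500, "onload_ms": 3000},
}
weights = {"dns_ms": 1.0, "ttfb_ms": 2.0, "onload_ms": 3.0}

for place, retailer in enumerate(weighted_rank(metrics, weights), start=1):
    print(place, retailer)
```

With these numbers Retailer A has the fastest DNS, but Retailer B leads on the more heavily weighted TTFB and load metrics, so B comes first overall. Ranking on ranks rather than raw values keeps a single metric with a large numeric range from dominating the result.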

Then we waited. What we found was fascinating.

What did the data reveal?

When the numbers came in, the results were striking. A few highlights stood out right away:

- Revenue doesn’t guarantee speed: Some of the largest retailers still had sluggish sites.

- Excellence is rare: Only 34% of retailers earned an excellent user experience score (90+).

- Uptime ≠ user happiness: 16 retailers with 99.9% uptime still delivered poor experiences.

- Not all customers see the same web: Even in the same city, performance could differ dramatically.

You can dive into the full report to see all of the patterns we uncovered. But one finding in particular jumped out: the sharp divergence between traditional metrics and actual user experience.

How big was the gap between metrics and experience?

Over 70% of retailers showed significant ranking differences (5 or more positions) between their traditional metrics performance and their Experience Score ranking.

Let that sink in.

In other words, more than two-thirds of the retailers we tested would tell a completely different story depending on whether you looked at technical metrics or actual user experience.

Complete ranking comparison

Key

- Exp Rank: Experience Score Ranking (1 = best user experience)

- Trad Rank: Weighted Traditional Metrics Ranking (1 = best technical metrics)

- Rank Diff: Traditional Rank - Experience Rank

  - Positive = Traditional metrics suggest worse performance than experience

  - Negative = Traditional metrics suggest better performance than experience
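The Rank Diff column is simple arithmetic, and the sketch below applies it to the (Traditional, Experience) rank pairs quoted in the brand examples in this post. The helper names `rank_diff` and `share_with_large_gap` are hypothetical. These six brands were chosen as examples of large gaps, so the share computed for them is not the report's 70% figure, which covers the full retailer list.

```python
def rank_diff(trad_rank: int, exp_rank: int) -> int:
    """Rank Diff as defined in the key: Traditional Rank - Experience Rank."""
    return trad_rank - exp_rank


def share_with_large_gap(ranks: dict[str, tuple[int, int]], threshold: int = 5) -> float:
    """Fraction of retailers whose two rankings differ by `threshold` or more places."""
    large = [name for name, (trad, exp) in ranks.items()
             if abs(rank_diff(trad, exp)) >= threshold]
    return len(large) / len(ranks)


# (Trad Rank, Exp Rank) pairs quoted in this post.
quoted = {
    "Lululemon": (22, 43),
    "Tesco": (25, 40),
    "Target": (36, 42),
    "TJX": (26, 6),
    "Metro AG": (28, 11),
    "Expert": (41, 20),
}

for name, (trad, exp) in quoted.items():
    diff = rank_diff(trad, exp)
    if diff > 0:
        reading = "experience better than traditional metrics suggest"
    elif diff < 0:
        reading = "experience worse than traditional metrics suggest"
    else:
        reading = "rankings agree"
    print(f"{name}: {diff:+d} ({reading})")

print(f"Share with a gap of 5+ places: {share_with_large_gap(quoted):.0%}")
```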

Which brands looked good on paper?

Some retailers looked great on paper but gave customers a very different experience once they hit the site:

- Lululemon looked strong at #22 in the technical ranking but slid to #43 on experience—a 21-place gap. The backend stats said “all good,” but shoppers still felt the friction in the browser.

- Tesco came in #25 by technical measures yet only #40 for user experience. Even with solid LCP (1,388ms) and 99.9% uptime, users still felt delays and layout shifts that no metric alone would explain.

- Target ranked #36 in traditional metrics but fell to #42 for experience. On the surface, the site was healthy, but real people still faced slowdowns and unstable pages.

Which brands punched above their weight?

On the flip side, some brands delighted customers even when their technical stats weren’t perfect:

- TJX placed #6 for user experience but only #26 by technical metrics—a 20-place positive swing that shows great front-end delivery can outweigh backend imperfections.

- Metro AG hit an Experience Score of 93 (#11) despite ranking #28 by technical measures. A TTFB of 763ms could have spelled trouble, but smart front-end design kept things smooth.

- Expert came in at #20 for user experience while technical metrics put them at #41—proof that what users feel matters more than the raw numbers.

Who delivered consistent user experience?

Across the board, consistency separated the best performers from the rest:

- Aldi, Action, and IKEA topped the user experience charts with scores of 100, 99, and 98. They weren’t always the technical frontrunners, but they delivered sites that simply felt fast and stable for real people everywhere.

- At the other end, Seven & I and FamilyMart scored just 51–55 on experience despite decent infrastructure. The lesson? Technical excellence is only half the battle—if you don’t account for regional networks and front-end design, users will notice.

What does this really mean?

The data validates something many in the performance world have long suspected: technical perfection doesn't guarantee user satisfaction.

The retailers achieving high Experience Scores despite middling traditional metrics likely succeeded through optimized critical rendering paths, effective front-end performance strategies, and superior user experience design that masked backend latency. Meanwhile, retailers with good technical metrics but poor Experience Scores were probably missing the real-world performance variations, network condition impacts, and interactive quality issues that actually affect users.

Why does this matter now?

With the global retail digital transformation market expanding at a 17.6% CAGR to reach $1.04 trillion by 2032, performance isn't just a technical consideration; it's becoming a competitive differentiator. Market share is being redistributed based on digital experience quality, not just technical infrastructure quality.

The retailers that understand this distinction, that recognize user experience as the ultimate metric, are the ones positioning themselves to win.

So where does that leave technical metrics?

Of course, technical metrics remain important, but they're diagnostic tools, not the end goal. The end goal is user satisfaction.

For performance teams, this suggests a shift from purely technical KPIs to more holistic Experience Level Objectives: metrics that directly gauge user experience and satisfaction. Instead of just tracking whether systems are up and technically fast, we need to track whether users are having good experiences.
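As a rough illustration of what an Experience Level Objective could look like in practice, the sketch below treats it like an SLO over Experience Score samples: the objective is met when a target share of samples reaches a score floor. The `ExperienceObjective` class, the 90-point floor (borrowed from the report's "excellent" band), the 95% target, and the sample scores are assumptions for illustration, not a Catchpoint API.

```python
from dataclasses import dataclass


@dataclass
class ExperienceObjective:
    """Hypothetical Experience Level Objective over Experience Score samples."""
    min_score: float     # score floor each sample should reach, e.g. 90
    target_ratio: float  # share of samples that must reach the floor, e.g. 0.95

    def attainment(self, scores: list[float]) -> float:
        """Fraction of samples at or above the score floor."""
        if not scores:
            raise ValueError("no Experience Score samples to evaluate")
        return sum(1 for s in scores if s >= self.min_score) / len(scores)

    def is_met(self, scores: list[float]) -> bool:
        """True when the attained share meets the target."""
        return self.attainment(scores) >= self.target_ratio


# Made-up Experience Score samples, e.g. from different test locations.
samples = [98, 95, 91, 72, 99, 93, 90, 88, 97, 96]
xlo = ExperienceObjective(min_score=90, target_ratio=0.95)
print(f"attainment={xlo.attainment(samples):.0%}, met={xlo.is_met(samples)}")
```

Here 8 of the 10 samples reach 90, so attainment is 0.8 and the 95% objective is missed, even though most individual samples look healthy. Tracking the share of good experiences, rather than an average, keeps a handful of poor locations from hiding behind strong ones.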

What happens next?

Going forward, our industry benchmark reports will lead with Experience Scores while still providing the technical metric breakdowns that help teams understand what's driving user experience outcomes. Because at the end of the day, users don't care about your TTFB or DNS resolution times. They care about whether your site feels fast and responsive.

And as our retail report shows, those can be two very different things.

Read the full benchmark report

Curious how your retail brand compares?

Get a free retail assessment with one of our Internet Performance Monitoring experts to understand both your technical metrics and your actual user experience performance.